Benchmark (ONNX) for LogisticRegression#

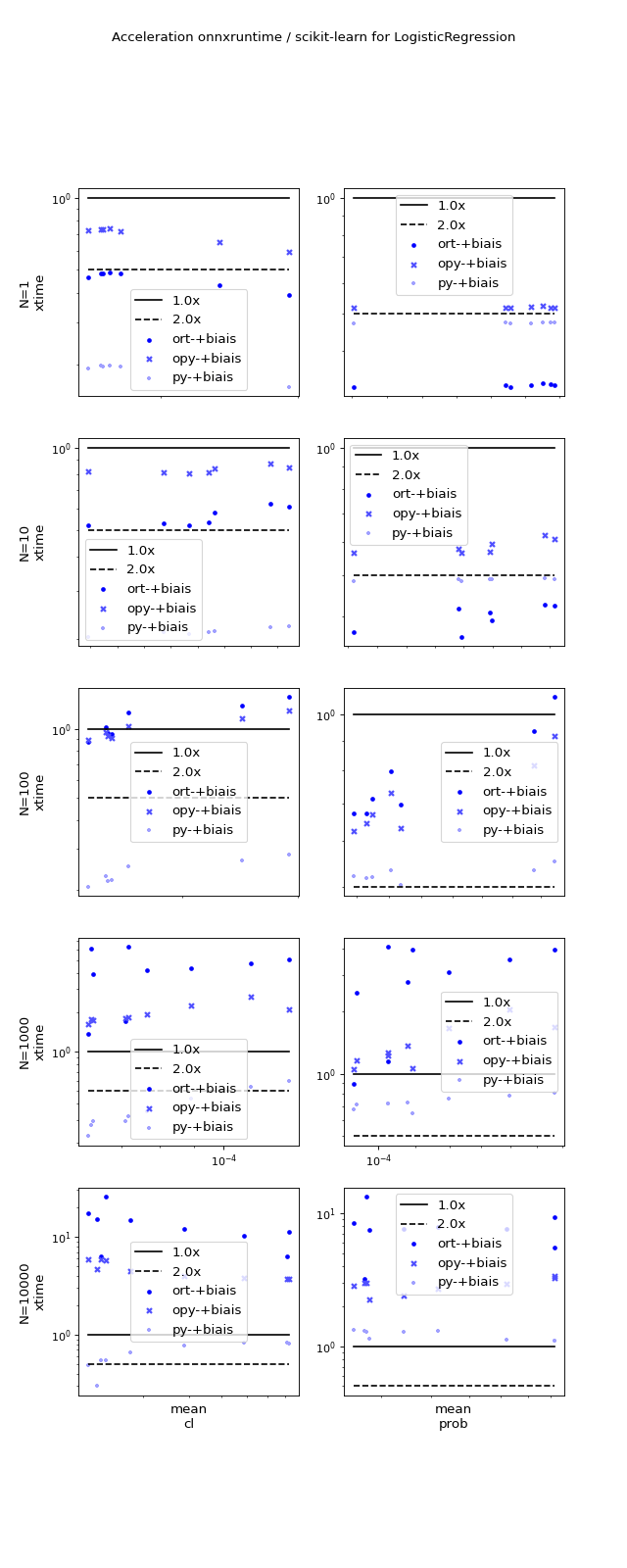

Overview#

(Source code, png, hires.png, pdf)

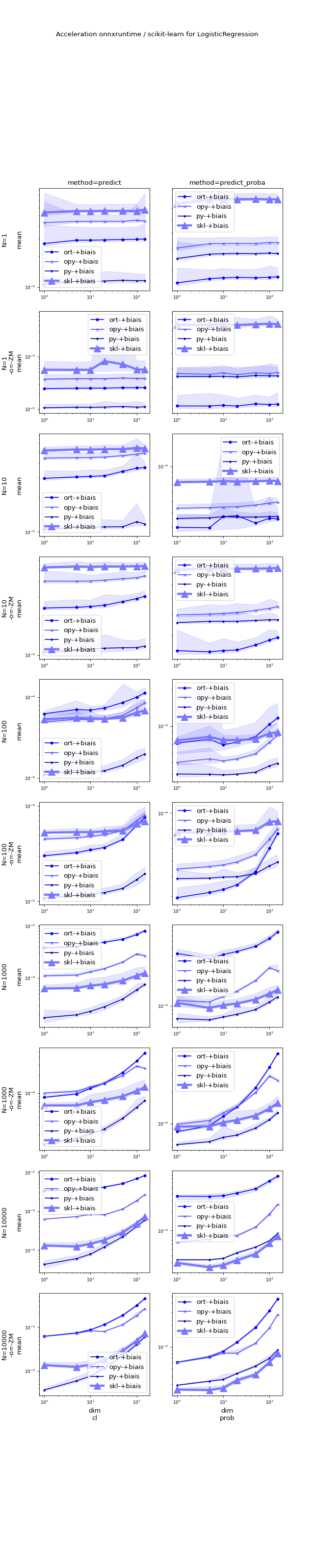

Detailed graphs#

(Source code, png, hires.png, pdf)

Configuration#

<<<

from pyquickhelper.pandashelper import df2rst

import pandas

name = os.path.join(

__WD__, "../../onnx/results/bench_plot_onnxruntime_logreg.time.csv")

df = pandas.read_csv(name)

print(df2rst(df, number_format=4))

>>>

name |

version |

value |

|---|---|---|

date |

2019-12-11 |

|

python |

3.7.2 (default, Mar 1 2019, 18:34:21) [GCC 6.3.0 20170516] |

|

platform |

linux |

|

OS |

Linux-4.9.0-8-amd64-x86_64-with-debian-9.6 |

|

machine |

x86_64 |

|

processor |

||

release |

4.9.0-8-amd64 |

|

architecture |

(‘64bit’, ‘’) |

|

mlprodict |

0.3 |

|

numpy |

1.17.4 |

openblas, language=c |

onnx |

1.6.34 |

opset=12 |

onnxruntime |

1.1.992 |

CPU-DNNL-MKL-ML |

pandas |

0.25.3 |

|

skl2onnx |

1.6.993 |

|

sklearn |

0.22 |

Raw results#

bench_plot_onnxruntime_logreg.csv

<<<

from pyquickhelper.pandashelper import df2rst

from pymlbenchmark.benchmark.bench_helper import bench_pivot

import pandas

name = os.path.join(

__WD__, "../../onnx/results/bench_plot_onnxruntime_logreg.perf.csv")

df = pandas.read_csv(name)

piv = bench_pivot(df).reset_index(drop=False)

piv['speedup_py'] = piv['skl'] / piv['onxpython_compiled']

piv['speedup_ort'] = piv['skl'] / piv['onxonnxruntime1']

print(df2rst(piv, number_format=4))

method |

skl_nb_base_estimators |

N |

dim |

fit_intercept |

onnx_options |

number |

count |

error_c |

onnx_opset |

error |

onxonnxruntime1 |

onxpython_compiled |

py |

skl |

speedup_py |

speedup_ort |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

1.069e-05 |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

2.669e-05 |

4.287e-05 |

||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

||||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

||||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

1.059e-05 |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

2.471e-05 |

3.76e-05 |

|||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

|||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

|||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

1 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

1.137e-05 |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

2.881e-05 |

4.413e-05 |

||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

||||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

||||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

1.086e-05 |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

2.503e-05 |

3.834e-05 |

|||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

|||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

|||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

5 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

1.136e-05 |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

2.882e-05 |

4.413e-05 |

||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

||||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

||||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

1 |

100 |

1 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

|||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

1.077e-05 |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

-1 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

2.516e-05 |

3.838e-05 |

|||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(37 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (1000 )-(1000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(69 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(15 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(17 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(2 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(28 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(29 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(32 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(34 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

0 |

12 |

ERROR: Dim (10000 )-(10000 ) - discrepencies between ‘skl’ and ‘onxpython_compiled’ for ‘(48 ‘predict’)’. |

||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |

100 |

1 |

-1 |

|||||||

predict |

-1 |

1 |

10 |

True |

{<class ‘sklearn.linear_model._logistic.LogisticRegression’>: {‘zipmap’: False}} |

1 |