2A.ml - Tree, hyperparameters, overfitting


Overfitting occurs when predictions on new data are clearly worse than those obtained on the training set. Random forests are less prone to overfitting than the decision trees they are made of. A few illustrations follow.

```python
from jyquickhelper import add_notebook_menu

add_notebook_menu()
```

```python
%matplotlib inline
```

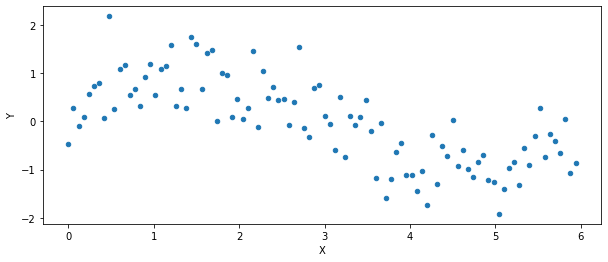

Generated data

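We generate a point cloud $Y = \sin(X) + \frac{\varepsilon}{2}$, where $\varepsilon \sim \mathcal{N}(0, 1)$ and $X$ takes $n$ regularly spaced values in $[0, 6)$.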

```python
import numpy
import numpy.random
import matplotlib.pyplot as plt


def generate_data(n):
    # n abscissas regularly spaced over [0, 6)
    X = numpy.arange(n) / n * 6
    # Gaussian noise with standard deviation 0.5
    mat = numpy.random.normal(size=(n, 1)) / 2
    si = numpy.sin(X).reshape((n, 1))
    Y = mat + si
    X = X.reshape((n, 1))
    data = numpy.hstack((X, Y))
    return data, X, Y
```

```python
import pandas

n = 100
data, X, Y = generate_data(n)
df = pandas.DataFrame(data, columns=["X", "Y"])
df.plot(x="X", y="Y", kind="scatter", figsize=(10, 4));
```

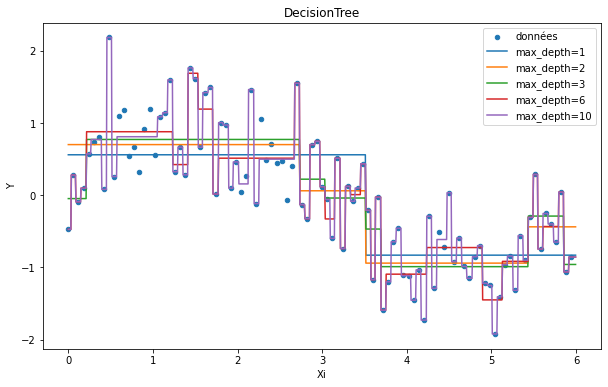

Different decision trees

We look at how some hyperparameters influence the output of the trained model.

max_depth

A tree represents a step function. The depth determines the number of leaves, that is, the number of possible output values: a tree of depth $d$ has at most $2^d$ leaves.
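The bound on the number of leaves can be checked directly on fitted trees. A minimal sketch, assuming synthetic data built like `generate_data` (the names `X_toy`, `y_toy`, `grid` are illustrative and chosen so as not to overwrite the notebook variables): it compares `get_n_leaves()` with $2^d$ and counts the distinct values of the step function on a fine grid.

```python
import numpy
from sklearn.tree import DecisionTreeRegressor

# synthetic data in the spirit of generate_data: sin + Gaussian noise
rng = numpy.random.RandomState(0)
X_toy = numpy.sort(rng.uniform(0, 6, 100)).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 100)
grid = numpy.linspace(0, 6, 1000).reshape(-1, 1)

results = []
for depth in [1, 2, 3, 6, 10]:
    tree = DecisionTreeRegressor(max_depth=depth, random_state=0).fit(X_toy, y_toy)
    n_leaves = tree.get_n_leaves()
    # distinct values taken by the step function over the grid
    n_values = len(numpy.unique(tree.predict(grid)))
    results.append((depth, n_leaves, n_values))
    print("max_depth=%d: %d leaves (bound %d), %d distinct values"
          % (depth, n_leaves, 2 ** depth, n_values))
```

For large depths the bound is no longer reached: with 100 observations a tree cannot have more than 100 leaves.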

```python
from sklearn.tree import DecisionTreeRegressor

ax = df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données", title="DecisionTree")
# fine grid over [0, 6) used to draw the step functions
Xi = (numpy.arange(n * 10) / n * 6 / 10).reshape((n * 10, 1))

for max_depth in [1, 2, 3, 6, 10]:
    clr = DecisionTreeRegressor(max_depth=max_depth)
    clr.fit(X, Y)
    pred = clr.predict(Xi)
    ex = pandas.DataFrame(Xi, columns=["Xi"])
    ex["pred"] = pred
    ex.sort_values("Xi").plot(x="Xi", y="pred", kind="line", label="max_depth=%d" % max_depth, ax=ax)
```

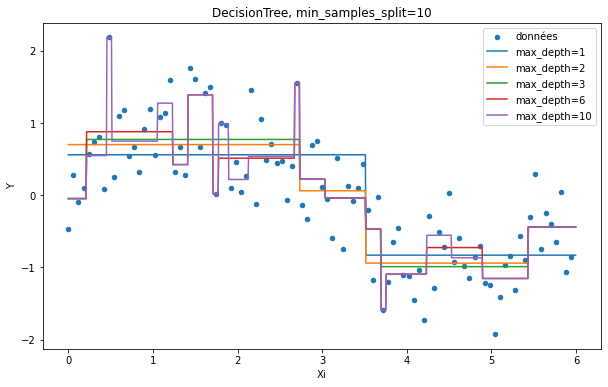

min_samples_split=10

Each leaf of a tree predicts a value computed from a set of observations. With min_samples_split, a node is split only if it contains at least that many observations (the related parameter min_samples_leaf sets the minimum number of observations in each leaf). This mechanism limits overfitting by making each leaf more representative.
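The difference between the two parameters can be read from the fitted tree structure. A minimal sketch, assuming the same kind of synthetic data (names `X_toy`, `y_toy` and the helper `node_sizes` are illustrative): `tree_.children_left` is `-1` for leaves and `tree_.n_node_samples` gives the number of training observations in each node.

```python
import numpy
from sklearn.tree import DecisionTreeRegressor

rng = numpy.random.RandomState(0)
X_toy = numpy.sort(rng.uniform(0, 6, 100)).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 100)


def node_sizes(tree):
    """Number of training samples in internal nodes and in leaves."""
    t = tree.tree_
    is_leaf = t.children_left == -1  # a leaf has no child
    return t.n_node_samples[~is_leaf], t.n_node_samples[is_leaf]


split_tree = DecisionTreeRegressor(min_samples_split=10, random_state=0).fit(X_toy, y_toy)
leaf_tree = DecisionTreeRegressor(min_samples_leaf=10, random_state=0).fit(X_toy, y_toy)

split_internal, split_leaves = node_sizes(split_tree)
leaf_internal, leaf_leaves = node_sizes(leaf_tree)
print("min_samples_split=10: smallest split node", split_internal.min(),
      "smallest leaf", split_leaves.min())
print("min_samples_leaf=10: smallest split node", leaf_internal.min(),
      "smallest leaf", leaf_leaves.min())
```

With min_samples_split=10 only the nodes that get split are guaranteed to hold 10 observations; leaves can be smaller.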

```python
ax = df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données",
             title="DecisionTree, min_samples_split=10")
Xi = (numpy.arange(n * 10) / n * 6 / 10).reshape((n * 10, 1))

for max_depth in [1, 2, 3, 6, 10]:
    clr = DecisionTreeRegressor(max_depth=max_depth, min_samples_split=10)
    clr.fit(X, Y)
    pred = clr.predict(Xi)
    ex = pandas.DataFrame(Xi, columns=["Xi"])
    ex["pred"] = pred
    ex.sort_values("Xi").plot(x="Xi", y="pred", kind="line", label="max_depth=%d" % max_depth, ax=ax)
```

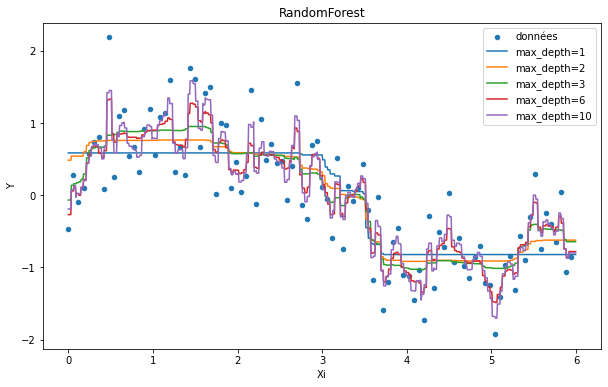

Random Forest

We study the same two parameters for a random forest, except that this model averages the predictions of a set of decision trees, each trained on a bootstrap sample of the training set (scikit-learn's default, bootstrap=True).
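The averaging can be verified with scikit-learn: the fitted trees are stored in `estimators_`, and the mean of their predictions should match `predict`. A minimal sketch on synthetic data similar to `generate_data` (names `X_toy`, `y_toy`, `forest` are illustrative).

```python
import numpy
from sklearn.ensemble import RandomForestRegressor

rng = numpy.random.RandomState(0)
X_toy = rng.uniform(0, 6, 200).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 200)

forest = RandomForestRegressor(n_estimators=20, max_depth=3, random_state=0)
forest.fit(X_toy, y_toy)

# estimators_ holds the fitted DecisionTreeRegressor objects
per_tree = numpy.array([tree.predict(X_toy) for tree in forest.estimators_])
manual = per_tree.mean(axis=0)  # every tree has the same weight 1 / n_estimators
print(numpy.abs(manual - forest.predict(X_toy)).max())
```

The printed gap should be at the level of floating-point rounding.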

max_depth

```python
from sklearn.ensemble import RandomForestRegressor

ax = df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données", title="RandomForest")
Xi = (numpy.arange(n * 10) / n * 6 / 10).reshape((n * 10, 1))

for max_depth in [1, 2, 3, 6, 10]:
    clr = RandomForestRegressor(max_depth=max_depth)
    clr.fit(X, Y.ravel())
    pred = clr.predict(Xi)
    ex = pandas.DataFrame(Xi, columns=["Xi"])
    ex["pred"] = pred
    ex.sort_values("Xi").plot(x="Xi", y="pred", kind="line", label="max_depth=%d" % max_depth, ax=ax)
```

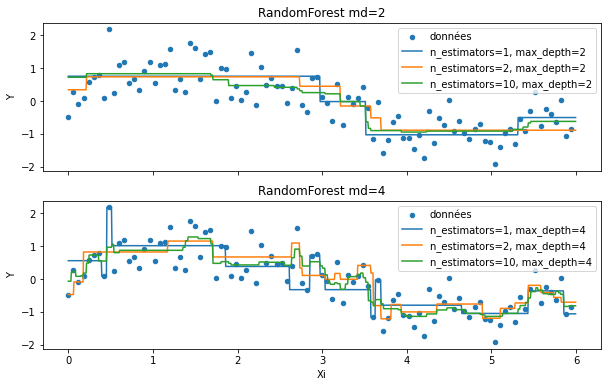

n_estimators

n_estimators is the number of decision trees in the forest. For gradient boosting, the same parameter is the number of boosting iterations, which equals the number of trees only for a regression or a binary classification: with K > 2 classes, each iteration builds K trees.
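The number of trees actually built can be inspected through `estimators_`. A minimal sketch on toy datasets from `make_classification` (names `Xc`, `yc`, `Xb`, `yb` are illustrative): a random forest keeps `n_estimators` trees whatever the number of classes, while `GradientBoostingClassifier` stores an array of shape (iterations, trees per iteration).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

# three-class problem
Xc, yc = make_classification(n_samples=300, n_features=5, n_informative=3,
                             n_redundant=0, n_classes=3, random_state=0)
rf = RandomForestClassifier(n_estimators=10, random_state=0).fit(Xc, yc)
gb = GradientBoostingClassifier(n_estimators=10, random_state=0).fit(Xc, yc)

# binary problem
Xb, yb = make_classification(n_samples=300, n_features=5, random_state=0)
gb_bin = GradientBoostingClassifier(n_estimators=10, random_state=0).fit(Xb, yb)

print(len(rf.estimators_))       # 10 trees, whatever the number of classes
print(gb.estimators_.shape)      # (10, 3): one regression tree per class and per iteration
print(gb_bin.estimators_.shape)  # (10, 1)
```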

```python
from sklearn.ensemble import RandomForestRegressor
import matplotlib.pyplot as plt

f, axarr = plt.subplots(2, sharex=True)
df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données", title="RandomForest md=2", ax=axarr[0])
df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données", title="RandomForest md=4", ax=axarr[1])
Xi = (numpy.arange(n * 10) / n * 6 / 10).reshape((n * 10, 1))

for i, max_depth in enumerate([2, 4]):
    for n_estimators in [1, 2, 10]:
        clr = RandomForestRegressor(n_estimators=n_estimators, max_depth=max_depth)
        clr.fit(X, Y.ravel())
        pred = clr.predict(Xi)
        ex = pandas.DataFrame(Xi, columns=["Xi"])
        ex["pred"] = pred
        ex.sort_values("Xi").plot(x="Xi", y="pred", kind="line",
                                  label="n_estimators=%d, max_depth=%d" % (n_estimators, max_depth),
                                  ax=axarr[i])
```

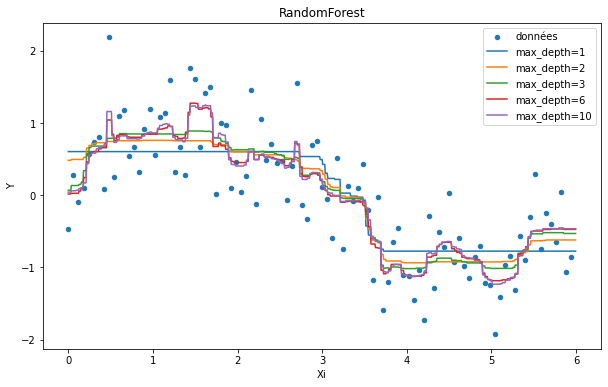

min_samples_split=10

```python
ax = df.plot(x="X", y="Y", kind="scatter", figsize=(10, 6), label="données", title="RandomForest")
Xi = (numpy.arange(n * 10) / n * 6 / 10).reshape((n * 10, 1))

for max_depth in [1, 2, 3, 6, 10]:
    clr = RandomForestRegressor(max_depth=max_depth, min_samples_split=10)
    clr.fit(X, Y.ravel())
    pred = clr.predict(Xi)
    ex = pandas.DataFrame(Xi, columns=["Xi"])
    ex["pred"] = pred
    ex.sort_values("Xi").plot(x="Xi", y="pred", kind="line", label="max_depth=%d" % max_depth, ax=ax)
```

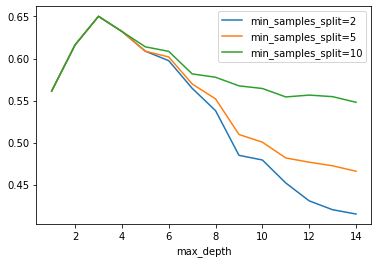

Training set and test set

This is one of the basic principles of machine learning: never evaluate a model on its training data. With enough leaves, a decision tree learns the target value of every single observation. The model learns the noise. Where the information ends and the noise begins is not always easy to decide.
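A quick way to see the problem is to score a fully grown tree on its own training data. A minimal sketch on synthetic data similar to `generate_data` (names `X_toy`, `y_toy`, `Xtr`, `Xte`, `ytr`, `yte` are illustrative): with `max_depth=None`, the tree reproduces the training targets exactly, so its training R² is 1, while its test R² is much lower. A random forest is shown for comparison.

```python
import numpy
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

rng = numpy.random.RandomState(0)
X_toy = rng.uniform(0, 6, 1000).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 1000)
Xtr, Xte, ytr, yte = train_test_split(X_toy, y_toy, test_size=0.33, random_state=42)

models = {
    # max_depth=None: the tree grows until every leaf is pure
    "full tree": DecisionTreeRegressor(random_state=0),
    "forest": RandomForestRegressor(n_estimators=100, random_state=0),
}
scores = {}
for name, model in models.items():
    model.fit(Xtr, ytr)
    scores[name] = (r2_score(ytr, model.predict(Xtr)), r2_score(yte, model.predict(Xte)))
    print("%-9s train R2=%.3f  test R2=%.3f" % ((name,) + scores[name]))
```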

Decision Tree

```python
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor
from sklearn.metrics import r2_score

data, X, Y = generate_data(1000)
X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=0.33, random_state=42)

min_samples_splits = [2, 5, 10]
rows = []
for max_depth in range(1, 15):
    d = dict(max_depth=max_depth)
    for min_samples_split in min_samples_splits:
        clr = DecisionTreeRegressor(max_depth=max_depth, min_samples_split=min_samples_split)
        clr.fit(X_train, y_train)
        pred = clr.predict(X_test)
        score = r2_score(y_test, pred)
        d["min_samples_split=%d" % min_samples_split] = score
    rows.append(d)

pandas.DataFrame(rows).plot(x="max_depth", y=["min_samples_split=%d" % _ for _ in min_samples_splits]);
```

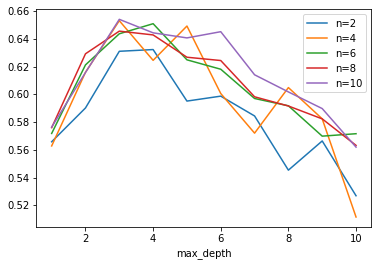

The peak of the test score shows that beyond a certain depth, performance decreases. From that point on, the model starts learning the noise of the training set: it overfits. We also notice that the model overfits less when min_samples_split=10.
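The same diagnosis can be automated with `sklearn.model_selection.validation_curve`, which returns training and validation scores for each value of a hyperparameter. A minimal sketch, assuming synthetic data similar to `generate_data` and a shuffled 5-fold cross-validation (shuffling matters here because unshuffled folds on sorted abscissas would test extrapolation); names such as `X_toy` and `depths` are illustrative.

```python
import numpy
from sklearn.model_selection import KFold, validation_curve
from sklearn.tree import DecisionTreeRegressor

rng = numpy.random.RandomState(0)
X_toy = numpy.sort(rng.uniform(0, 6, 1000)).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 1000)

depths = numpy.arange(1, 15)
cv = KFold(n_splits=5, shuffle=True, random_state=0)
train_scores, test_scores = validation_curve(
    DecisionTreeRegressor(random_state=0), X_toy, y_toy,
    param_name="max_depth", param_range=depths, cv=cv, scoring="r2")

train_mean = train_scores.mean(axis=1)  # keeps increasing with the depth
test_mean = test_scores.mean(axis=1)    # peaks, then decreases
best_depth = depths[test_mean.argmax()]
print("best max_depth:", best_depth)
print("train - test gap at max_depth=14:", round(train_mean[-1] - test_mean[-1], 3))
```

The growing gap between training and validation scores is the overfitting described above.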

Random Forest

```python
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import r2_score

rows = []
for n_estimators in range(1, 11):
    for max_depth in range(1, 11):
        for min_samples_split in [2, 5, 10]:
            # the three explored hyperparameters are passed to the model
            clr = RandomForestRegressor(n_estimators=n_estimators, max_depth=max_depth,
                                        min_samples_split=min_samples_split)
            clr.fit(X_train, y_train.ravel())
            pred = clr.predict(X_test)
            score = r2_score(y_test, pred)
            d = dict(max_depth=max_depth)
            d["n_estimators"] = n_estimators
            d["min_samples_split"] = min_samples_split
            d["score"] = score
            rows.append(d)

pl = pandas.DataFrame(rows)
pl.head()
```

|   | max_depth | n_estimators | min_samples_split | score |
|---|---|---|---|---|
| 0 | 1 | 1 | 2 | 0.542394 |
| 1 | 1 | 1 | 5 | 0.549491 |
| 2 | 1 | 1 | 10 | 0.532312 |
| 3 | 2 | 1 | 2 | 0.569562 |
| 4 | 2 | 1 | 5 | 0.578708 |

```python
ax = pl[(pl.min_samples_split == 10) & (pl.n_estimators == 2)].plot(x="max_depth", y="score", label="n=2")
for i in (4, 6, 8, 10):
    pl[(pl.min_samples_split == 10) & (pl.n_estimators == i)].plot(
        x="max_depth", y="score", label="n=%d" % i, ax=ax)
```

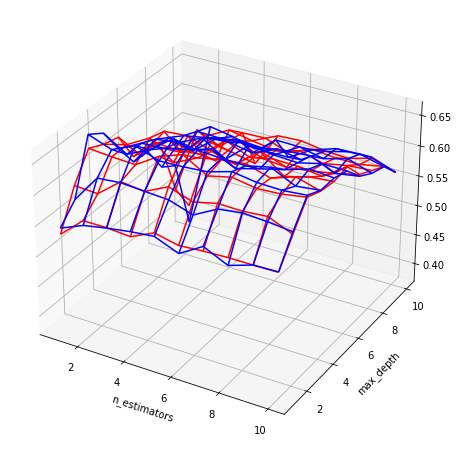

```python
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the 3d projection on older matplotlib versions

fig = plt.figure(figsize=(8, 8))
ax = fig.add_subplot(111, projection='3d')

for v, c in [(2, "b"), (10, "r")]:
    # rows: n_estimators, columns: max_depth, values: test R²
    piv = pl[pl.min_samples_split == v].pivot(index="n_estimators", columns="max_depth", values="score")
    # coordinate grids with the same shape as piv
    pivY, pivX = numpy.meshgrid(piv.columns, piv.index)
    ax.plot_wireframe(pivX, pivY, piv.values, color=c)

ax.set_xlabel("n_estimators")
ax.set_ylabel("max_depth");
```

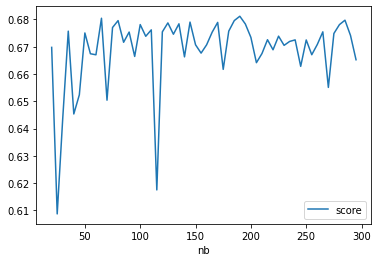

Neural networks

On this particular problem, gradient-based methods perform worse. They also seem less stable: the score curve is more jagged than the one obtained for random forests. This kind of optimization is more sensitive to local optima.
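One way to observe this instability is to train the same network several times on the same data, changing only `random_state`. A minimal sketch, assuming synthetic data similar to `generate_data` and a small network (50 hidden units, 300 iterations, values chosen for speed, not taken from the notebook); names such as `mlp_scores` are illustrative.

```python
import warnings

import numpy
from sklearn.exceptions import ConvergenceWarning
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

rng = numpy.random.RandomState(0)
X_toy = rng.uniform(0, 6, 500).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 500)
Xtr, Xte, ytr, yte = train_test_split(X_toy, y_toy, test_size=0.33, random_state=42)

mlp_scores = []
with warnings.catch_warnings():
    # short runs: the optimizer may stop before convergence
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    for seed in range(5):
        # same data, same architecture: only the random initialization
        # (and the mini-batch shuffling) changes with random_state
        mlp = MLPRegressor(hidden_layer_sizes=(50,), max_iter=300, random_state=seed)
        mlp.fit(Xtr, ytr)
        mlp_scores.append(r2_score(yte, mlp.predict(Xte)))

print(numpy.round(mlp_scores, 3), "std:", round(float(numpy.std(mlp_scores)), 3))
```

Running the same loop with `RandomForestRegressor(random_state=seed)` gives a point of comparison.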

```python
from sklearn.neural_network import MLPRegressor
from sklearn.metrics import r2_score

rows = []
for nb in range(20, 300, 5):
    # one hidden layer with nb neurons
    clr = MLPRegressor(hidden_layer_sizes=(nb,), activation="relu", max_iter=800)
    clr.fit(X_train, y_train.ravel())
    pred = clr.predict(X_test)
    score = r2_score(y_test, pred)
    # runs ending with a negative R² are left out of the plot
    if score > 0:
        d = dict(nb=nb, score=score)
        rows.append(d)

pandas.DataFrame(rows).plot(x="nb", y=["score"]);
```

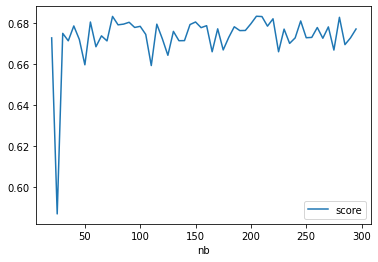

Neural networks, alpha=0

```python
from sklearn.neural_network import MLPRegressor
from sklearn.metrics import r2_score

rows = []
for nb in range(20, 300, 5):
    # alpha=0: no L2 penalty on the weights
    clr = MLPRegressor(hidden_layer_sizes=(nb,), activation="relu",
                       alpha=0, tol=1e-6, max_iter=800)
    clr.fit(X_train, y_train.ravel())
    pred = clr.predict(X_test)
    score = r2_score(y_test, pred)
    # runs ending with a negative R² are left out of the plot
    if score > 0:
        d = dict(nb=nb, score=score)
        rows.append(d)

pandas.DataFrame(rows).plot(x="nb", y=["score"]);
```

```
C:\Python395_x64\lib\site-packages\sklearn\neural_network\_multilayer_perceptron.py:692: ConvergenceWarning: Stochastic Optimizer: Maximum iterations (800) reached and the optimization hasn't converged yet.
  warnings.warn(
```

Exercise 1: find the optimal parameters for this example

Check the result with an automated search: grid_search (now part of sklearn.model_selection, for example GridSearchCV) or hyperopt.
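A possible starting point with `GridSearchCV`. This is a minimal sketch on synthetic data similar to `generate_data`; the grid values are arbitrary and kept small so that the search runs quickly, and names such as `param_grid` and `search` are illustrative.

```python
import numpy
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, KFold

rng = numpy.random.RandomState(0)
X_toy = numpy.sort(rng.uniform(0, 6, 300)).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 300)

param_grid = {
    "n_estimators": [10, 50],
    "max_depth": [2, 4, 6, None],
    "min_samples_split": [2, 10],
}
# shuffle=True: X_toy is sorted, unshuffled folds would test extrapolation
cv = KFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(RandomForestRegressor(random_state=0), param_grid, cv=cv, scoring="r2")
search.fit(X_toy, y_toy)
print(search.best_params_, round(search.best_score_, 3))
```

`search.cv_results_` contains the score of every combination if the whole surface is needed, as in the 3D plot above.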

Exercise 2: add a few outliers
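One way to set up the exercise is to corrupt a small fraction of the targets before training. A minimal sketch with a hypothetical helper `add_outliers` (not part of the notebook): it returns a copy of `Y` where a fraction `ratio` of the values is shifted by plus or minus `scale`, together with the indices of the modified rows. It accepts both a column vector such as the `Y` returned by `generate_data` and a 1D array.

```python
import numpy


def add_outliers(Y, ratio=0.05, scale=5.0, seed=0):
    """Hypothetical helper: shifts a fraction `ratio` of the targets by +/- `scale`."""
    rng = numpy.random.RandomState(seed)
    Y2 = numpy.array(Y, dtype=float, copy=True)
    n = Y2.shape[0]
    k = max(1, int(round(ratio * n)))
    idx = rng.choice(n, size=k, replace=False)  # distinct rows to corrupt
    signs = rng.choice([-1.0, 1.0], size=k)
    # reshape the shifts so they also work for a (n, 1) column vector
    Y2[idx] += (signs * scale).reshape((k,) + (1,) * (Y2.ndim - 1))
    return Y2, idx


Y_clean = numpy.zeros((100, 1))
Y_noisy, outlier_idx = add_outliers(Y_clean, ratio=0.05, scale=5.0)
print(sorted(outlier_idx), Y_noisy[outlier_idx].ravel())
```

The corrupted targets can then replace `y_train` in the previous experiments to compare how trees and forests react.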

Confidence intervals

We use the forest-confidence-interval module (imported as forestci). It relies on the jackknife to estimate the variance of the random forest predictions, from which confidence intervals are derived. Roughly speaking, the estimator measures how much the output of the forest depends on each training observation, through the number of times that observation appears in the bootstrap sample of each tree. The theory relies on a resampling of the training set that the paper considers equivalent to the one scikit-learn performs to build each tree.

The idea comes from the paper Confidence Intervals for Random Forests: The Jackknife and the Infinitesimal Jackknife. I think one or two bugs remain: the algorithm sometimes produces missing values that should not occur, and the confidence intervals sometimes vary a lot from one training run to the next.
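To make the estimator less of a black box, here is a hand-made sketch of the infinitesimal jackknife formula as we read it in the paper: $\hat V_{IJ}(x) = \sum_i \widehat{\mathrm{Cov}}_i^2$ with $\widehat{\mathrm{Cov}}_i = \frac{1}{B}\sum_b (N_{bi} - 1)(t_b(x) - \bar t(x))$, where $N_{bi}$ counts how many times observation $i$ is drawn for tree $b$ and $t_b(x)$ is the prediction of tree $b$. The bias-corrected version subtracts $\frac{n}{B^2}\sum_b (t_b(x) - \bar t(x))^2$. This is an assumption-laden illustration: it uses plain bagged trees built by hand (so the in-bag counts are known), it does not reproduce forestci's code, and it skips forestci's calibration step. The function name `bagging_ij_variance` is hypothetical.

```python
import numpy
from sklearn.tree import DecisionTreeRegressor


def bagging_ij_variance(X_train, y_train, X_test, n_trees=200, max_depth=6, seed=0):
    """Hypothetical helper: bagged regression trees built by hand, with the
    infinitesimal jackknife variance of each prediction (plain and bias-corrected)."""
    rng = numpy.random.RandomState(seed)
    n = X_train.shape[0]
    inbag = numpy.zeros((n, n_trees))                # N_bi: draws of observation i for tree b
    preds = numpy.zeros((X_test.shape[0], n_trees))  # t_b(x): prediction of tree b
    for b in range(n_trees):
        idx = rng.randint(0, n, n)                   # bootstrap sample of size n
        inbag[:, b] = numpy.bincount(idx, minlength=n)
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=b)
        tree.fit(X_train[idx], y_train[idx])
        preds[:, b] = tree.predict(X_test)
    mean_pred = preds.mean(axis=1)
    centered = preds - mean_pred[:, None]            # t_b(x) - mean over trees
    # Cov_i(x) = 1/B * sum_b (N_bi - 1) (t_b(x) - mean), shape (n, n_test)
    cov = (inbag - 1) @ centered.T / n_trees
    v_ij = (cov ** 2).sum(axis=0)
    # bias correction: n / B^2 * sum_b (t_b(x) - mean)^2
    v_ij_u = v_ij - n / n_trees ** 2 * (centered ** 2).sum(axis=1)
    return mean_pred, v_ij, v_ij_u


rng = numpy.random.RandomState(42)
X_toy = rng.uniform(0, 6, 300).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 300)
X_grid = numpy.linspace(0, 6, 50).reshape(-1, 1)
mean_pred, v_ij, v_ij_u = bagging_ij_variance(X_toy, y_toy, X_grid)
# the corrected estimate may be negative: clip it before taking the square root
std_err = numpy.sqrt(numpy.maximum(v_ij_u, 0))
```

Because the bias-corrected variance can be negative, taking `numpy.sqrt` without clipping produces `nan`, which is one possible source of missing values in error bars.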

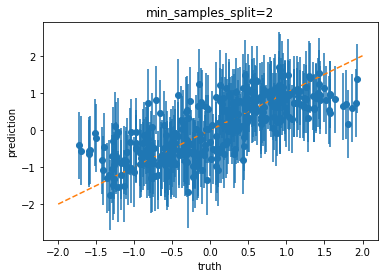

```python
from sklearn.ensemble import RandomForestRegressor

clr = RandomForestRegressor(min_samples_split=2)
clr.fit(X_train, y_train.ravel())
```

```python
# forestci is not up to date with the latest sklearn version:
# _generate_sample_indices now expects a third argument, n_samples_bootstrap,
# so it is wrapped to keep the older two-argument call working
try:
    from sklearn.ensemble._forest import _generate_sample_indices as old_fct
    import sklearn.ensemble._forest

    def new_generate_sample_indices(random_state, n_samples, n_samples_bootstrap=None):
        if n_samples_bootstrap is None:
            n_samples_bootstrap = n_samples
        return old_fct(random_state, n_samples, n_samples_bootstrap)

    setattr(sklearn.ensemble._forest, '_generate_sample_indices', new_generate_sample_indices)
except ImportError:
    pass
```

```python
import forestci

pred = clr.predict(X_test)
# in-bag counts: how many times each training observation is used by each tree
mpg_inbag = forestci.calc_inbag(X_train.shape[0], clr)
mpg_V_IJ_unbiased = forestci.random_forest_error(
    clr, X_train=X_train, X_test=X_test, inbag=mpg_inbag,
    calibrate=True)

plt.errorbar(y_test, pred, yerr=numpy.sqrt(mpg_V_IJ_unbiased), fmt='o')
plt.plot([-2, 2], [-2, 2], '--')
plt.xlabel('truth')
plt.ylabel('prediction')
plt.title("min_samples_split=2");
```

```
C:\Python395_x64\lib\site-packages\forestci\calibration.py:86: RuntimeWarning: overflow encountered in exp
  g_eta_raw = np.exp(np.dot(XX, eta)) * mask
C:\Python395_x64\lib\site-packages\numpy\core\fromnumeric.py:86: RuntimeWarning: overflow encountered in reduce
  return ufunc.reduce(obj, axis, dtype, out, **passkwargs)
```

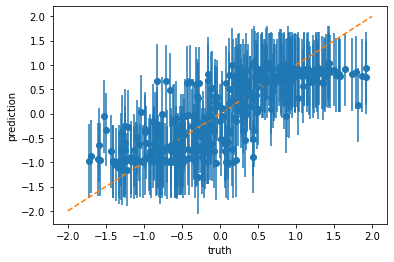

```python
import forestci

clr = RandomForestRegressor(n_estimators=40, max_depth=6)
clr.fit(X_train, y_train.ravel())
pred = clr.predict(X_test)
mpg_inbag = forestci.calc_inbag(X_train.shape[0], clr)
mpg_V_IJ_unbiased = forestci.random_forest_error(clr, X_train=X_train, X_test=X_test, inbag=mpg_inbag)

plt.errorbar(y_test, pred, yerr=numpy.sqrt(mpg_V_IJ_unbiased), fmt='o')
plt.plot([-2, 2], [-2, 2], '--')
plt.xlabel('truth')
plt.ylabel('prediction');
```

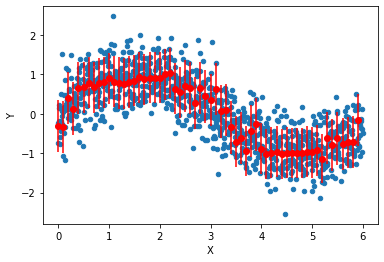

```python
X_plt = numpy.arange(start=0, stop=6, step=0.1)
X_plt = X_plt.reshape((len(X_plt), 1))
pred = clr.predict(X_plt)
mpg_inbag = forestci.calc_inbag(X_train.shape[0], clr)
mpg_V_IJ_unbiased = forestci.random_forest_error(clr, X_train=X_train, X_test=X_plt, inbag=mpg_inbag)

df = pandas.DataFrame(numpy.hstack((X, Y)), columns=["X", "Y"])
ax = df.plot(x="X", y="Y", kind="scatter")
ax.errorbar(X_plt, pred, yerr=numpy.sqrt(mpg_V_IJ_unbiased), fmt='o', color="r")
plt.xlabel('X')
plt.ylabel('Y');
```

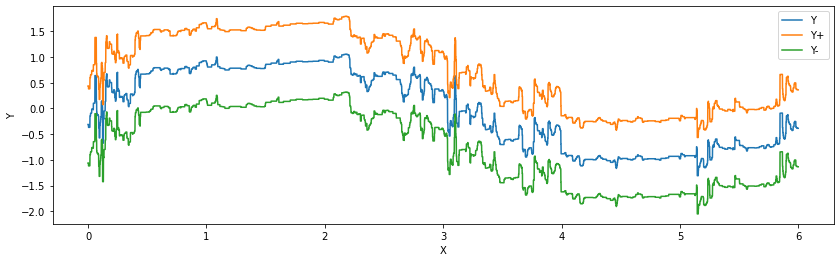

```python
Xs = numpy.arange(start=0, stop=6, step=0.001)
Xs = Xs.reshape((len(Xs), 1))
ps = clr.predict(Xs)
ps = ps.reshape((len(ps), 1))
ci = forestci.random_forest_error(clr, X_train=X_train, X_test=Xs, inbag=mpg_inbag)
ci = numpy.sqrt(ci).reshape((len(ci), 1))

df = pandas.DataFrame(numpy.hstack((Xs, ps, ps + ci, ps - ci)), columns=["X", "Y", "Y+", "Y-"])
ax = df.plot(x="X", y=["Y", "Y+", "Y-"], kind="line", figsize=(14, 4))
plt.xlabel('X')
plt.ylabel('Y');
```

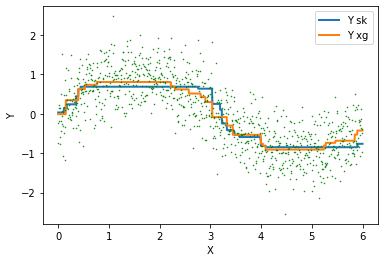

XGBoost

```python
from sklearn.ensemble import RandomForestRegressor
from xgboost import XGBRegressor

clr = RandomForestRegressor(n_estimators=10, max_depth=2)
clr.fit(X_train, y_train.ravel())

clrx = XGBRegressor(n_estimators=10, max_depth=2)
clrx.fit(X_train, y_train.ravel())
```


```
XGBRegressor(base_score=0.5, booster='gbtree', colsample_bylevel=1,
             colsample_bynode=1, colsample_bytree=1, enable_categorical=False,
             gamma=0, gpu_id=-1, importance_type=None,
             interaction_constraints='', learning_rate=0.300000012,
             max_delta_step=0, max_depth=2, min_child_weight=1, missing=nan,
             monotone_constraints='()', n_estimators=10, n_jobs=4,
             num_parallel_tree=1, predictor='auto', random_state=0, reg_alpha=0,
             reg_lambda=1, scale_pos_weight=1, subsample=1, tree_method='exact',
             validate_parameters=1, verbosity=None)
```

```python
Xs = numpy.arange(start=0, stop=6, step=0.001)
Xs = Xs.reshape((len(Xs), 1))
ps = clr.predict(Xs)
ps = ps.reshape((len(ps), 1))
psx = clrx.predict(Xs)
psx = psx.reshape((len(psx), 1))

df = pandas.DataFrame(numpy.hstack((Xs, ps, psx)), columns=["X", "Y sk", "Y xg"])
ax = df.plot(x="X", y=["Y sk", "Y xg"], kind="line", lw=2)
ax.plot(X, Y, 'g.', ms=1)
plt.xlabel('X')
plt.ylabel('Y');
```
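Both models above use 10 trees of depth 2, but they combine them differently: the random forest averages trees trained independently, while boosting adds trees one after the other, each one fitted on the residuals of the current model. A minimal hand-made sketch of plain gradient boosting for the squared loss, assuming synthetic data similar to `generate_data`; it is not XGBoost's algorithm (which also uses second-order information and regularization), and the helper names `boost_by_hand` and `predict_boosted` are hypothetical. The learning rate 0.3 mirrors the `learning_rate=0.300000012` shown in the XGBRegressor output above.

```python
import numpy
from sklearn.tree import DecisionTreeRegressor


def boost_by_hand(X, y, n_estimators=10, max_depth=2, learning_rate=0.3):
    """Gradient boosting for the squared loss: each new tree is fitted on the
    residuals y - F(x), i.e. the negative gradient of the loss."""
    base = y.mean()  # initial constant model
    pred = numpy.full(y.shape, base)
    trees, losses = [], [numpy.mean((y - pred) ** 2)]
    for b in range(n_estimators):
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=b)
        tree.fit(X, y - pred)
        pred = pred + learning_rate * tree.predict(X)
        trees.append(tree)
        losses.append(numpy.mean((y - pred) ** 2))
    return base, trees, losses


def predict_boosted(base, trees, X, learning_rate=0.3):
    return base + learning_rate * sum(tree.predict(X) for tree in trees)


rng = numpy.random.RandomState(0)
X_toy = rng.uniform(0, 6, 500).reshape(-1, 1)
y_toy = numpy.sin(X_toy.ravel()) + rng.normal(0, 0.5, 500)
base, trees, losses = boost_by_hand(X_toy, y_toy)
print(numpy.round(losses, 3))  # training MSE after 0, 1, ..., 10 trees
```

Since each leaf value is the mean of the residuals it contains, every step with a learning rate between 0 and 1 can only lower the training error, which is why boosting, unlike averaging, keeps fitting the training set more closely as trees are added.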