Note

Click here to download the full example code

TopK benchmark#

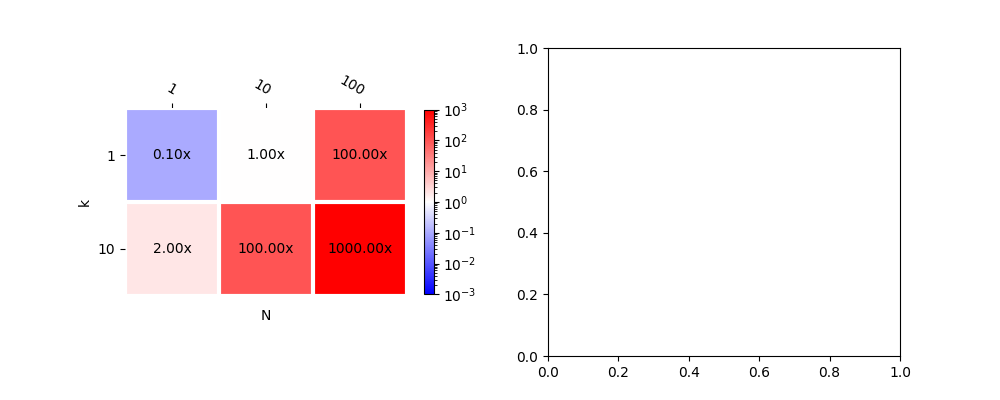

This example compares onnxruntime and mlprodict for an implementation of operator TopK. We measure two runtimes by computing a ratio between their time execution through the following kind of graphs.

Graph to compare performance#

from numpy.random import randn

import numpy

import matplotlib.pyplot as plt

from pandas import DataFrame

from onnxruntime import InferenceSession, __version__ as ort_version

from tqdm import tqdm

from cpyquickhelper.numbers import measure_time

from pyquickhelper.pycode.profiling import profile

from skl2onnx.algebra.onnx_ops import OnnxTopK_11

from skl2onnx.common.data_types import FloatTensorType

from skl2onnx.algebra.onnx_ops import OnnxTopK

from mlprodict.onnxrt.validate.validate_benchmark import benchmark_fct

from mlprodict.onnxrt import OnnxInference

from mlprodict.onnxrt.ops_cpu.op_topk import (

topk_sorted_implementation, topk_sorted_implementation_cpp)

from mlprodict import __version__ as mlp_version

from mlprodict.plotting.plotting import plot_benchmark_metrics

Available optimisation on this machine.

from mlprodict.testing.experimental_c_impl.experimental_c import code_optimisation

print(code_optimisation())

AVX-omp=8

Graph.

def plot_metric(metric, ax=None, xlabel="N", ylabel="k", middle=1.,

transpose=False, shrink=1.0, title=None):

ax, cbar = plot_benchmark_metrics(

metric, ax=ax, xlabel=xlabel, ylabel=ylabel, middle=middle,

transpose=transpose, cbar_kw={'shrink': shrink})

if title is not None:

ax.set_title(title)

return ax

data = {(1, 1): 0.1, (10, 1): 1, (1, 10): 2,

(10, 10): 100, (100, 1): 100, (100, 10): 1000}

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

plot_metric(data, ax[0], shrink=0.6)

<AxesSubplot: xlabel='N', ylabel='k'>

plot_metric(data, ax[1], transpose=True)

<AxesSubplot: xlabel='k', ylabel='N'>

TopK in ONNX#

The following lines creates an ONNX graph using one TopK ONNX node. The outcome is the ONNX graph converted into json.

X32 = randn(100000, 100).astype(numpy.float32)

node = OnnxTopK_11('X', numpy.array([5], dtype=numpy.int64),

output_names=['dist', 'ind'])

model_onnx = node.to_onnx(

[('X', X32)], target_opset=12,

# shape inference does not seem to work in onnxruntime

# so we speccify the output shape

outputs=[('dist', X32[:1, :5]),

('ind', X32[:1, :5].astype(numpy.int64))])

model_onnx

ir_version: 6

producer_name: "skl2onnx"

producer_version: "1.13.1"

domain: "ai.onnx"

model_version: 0

graph {

node {

input: "X"

input: "To_TopKcst"

output: "dist"

output: "ind"

name: "To_TopK"

op_type: "TopK"

domain: ""

}

name: "OnnxTopK_11"

initializer {

dims: 1

data_type: 7

int64_data: 5

name: "To_TopKcst"

}

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

dim_value: 100

}

}

}

}

}

output {

name: "dist"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

dim_value: 5

}

}

}

}

}

output {

name: "ind"

type {

tensor_type {

elem_type: 7

shape {

dim {

}

dim {

dim_value: 5

}

}

}

}

}

}

opset_import {

domain: ""

version: 11

}

That gives…

oinf = OnnxInference(model_onnx, runtime="python")

res = oinf.run({'X': X32})

dist, ind = res['dist'], res['ind']

dist[:2], ind[:2]

(array([[2.9598944, 2.1381311, 2.010453 , 1.9549123, 1.9296134],

[2.168306 , 1.9842782, 1.9551469, 1.8612137, 1.6905841]],

dtype=float32), array([[81, 7, 90, 49, 14],

[48, 20, 77, 53, 93]]))

With onnxruntime.

sess = InferenceSession(model_onnx.SerializeToString())

dist, ind = sess.run(None, {'X': X32})

dist[:2], ind[:2]

(array([[2.9598944, 2.1381311, 2.010453 , 1.9549123, 1.9296134],

[2.168306 , 1.9842782, 1.9551469, 1.8612137, 1.6905841]],

dtype=float32), array([[81, 7, 90, 49, 14],

[48, 20, 77, 53, 93]], dtype=int64))

Let’s compare two implementations.

def benchmark(X, fct1, fct2, N, repeat=10, number=10):

def ti(n):

if n <= 1:

return 50

if n <= 1000:

return 2

if n <= 10000:

return 0.51

return 0.11

# to warm up the engine

time_kwargs = {n: dict(repeat=10, number=10) for n in N[:2]}

benchmark_fct(fct1, X, time_kwargs=time_kwargs, skip_long_test=False)

benchmark_fct(fct2, X, time_kwargs=time_kwargs, skip_long_test=False)

# real measure

time_kwargs = {n: dict(repeat=int(repeat * ti(n)),

number=int(number * ti(n))) for n in N}

res1 = benchmark_fct(fct1, X, time_kwargs=time_kwargs,

skip_long_test=False)

res2 = benchmark_fct(fct2, X, time_kwargs=time_kwargs,

skip_long_test=False)

res = {}

for r in sorted(res1):

r1 = res1[r]

r2 = res2[r]

ratio = r2['ttime'] / r1['ttime']

res[r] = ratio

return res

N = [1, 10, 100, 1000, 10000, 100000]

res = benchmark(X32, lambda x: sess.run(None, {'X': x}),

lambda x: oinf.run({'X': x}), N=N)

res

{1: 1.5083332913744698, 10: 1.4151957338951786, 100: 36.552590364056236, 1000: 14.071824402120802, 10000: 2.9472532147646975, 100000: 1.0841909476754}

The implementation in mlprodict is faster when the number of rows grows. It is faster for 1 rows, for many rows, the implementation uses openmp to parallelize.

C++ implementation vs numpy#

scikit-learn uses numpy to compute the top k elements.

res = benchmark(X32, lambda x: topk_sorted_implementation(x, 5, 1, 0),

lambda x: topk_sorted_implementation_cpp(x, 5, 1, 0), N=N)

res

{1: 0.3022641439658741, 10: 0.3051468584177248, 100: 20.282515692671545, 1000: 2.5687795181097637, 10000: 0.3324244877649639, 100000: 0.12383379717969736}

It seems to be faster too. Let’s profile.

xr = randn(1000000, 100)

text = profile(lambda: topk_sorted_implementation(xr, 5, 1, 0),

pyinst_format='text')[1]

print(text)

_ ._ __/__ _ _ _ _ _/_ Recorded: 05:37:59 AM Samples: 5

/_//_/// /_\ / //_// / //_'/ // Duration: 3.054 CPU time: 3.046

/ _/ v4.4.0

Program: somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_onnx_topk.py

3.053 profile ../pycode/profiling.py:455

`- 3.053 <lambda> plot_op_onnx_topk.py:177

[15 frames hidden] plot_op_onnx_topk, mlprodict, <__arra...

3.053 topk_sorted_implementation mlprodict/onnxrt/ops_cpu/op_topk.py:18

Parallelisation#

We need to disable the parallelisation to really compare both implementation.

# In[11]:

def benchmark_test(X, fct1, fct2, N, K, repeat=10, number=10):

res = {}

for k in tqdm(K):

def f1(x, k=k): return fct1(x, k=k)

def f2(x, k=k): return fct2(x, k=k)

r = benchmark(X32, f1, f2, N=N, repeat=repeat, number=number)

for n, v in r.items():

res[n, k] = v

return res

K = [1, 2, 5, 10, 15]

N = [1, 2, 3, 10, 100, 1000, 10000]

bench_para = benchmark_test(

X32, (lambda x, k: topk_sorted_implementation_cpp(

x, k=k, axis=1, largest=0, th_para=100000000)),

(lambda x, k: topk_sorted_implementation_cpp(

x, k=k, axis=1, largest=0, th_para=1)),

N=N, K=K)

bench_para

0%| | 0/5 [00:00<?, ?it/s]

20%|## | 1/5 [00:25<01:41, 25.43s/it]

40%|#### | 2/5 [00:51<01:16, 25.63s/it]

60%|###### | 3/5 [01:12<00:46, 23.44s/it]

80%|######## | 4/5 [01:36<00:23, 23.78s/it]

100%|##########| 5/5 [02:05<00:00, 25.64s/it]

100%|##########| 5/5 [02:05<00:00, 25.06s/it]

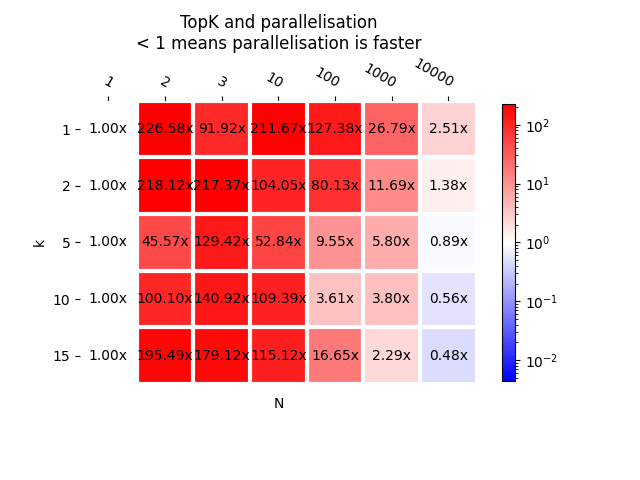

{(1, 1): 0.997243362701949, (2, 1): 226.58457110313003, (3, 1): 91.92390684419328, (10, 1): 211.67434719837902, (100, 1): 127.37722134692386, (1000, 1): 26.785530001156285, (10000, 1): 2.5146208558874927, (1, 2): 0.9962467190337722, (2, 2): 218.124131585238, (3, 2): 217.37006961389514, (10, 2): 104.04522063606211, (100, 2): 80.1330086175351, (1000, 2): 11.69194050874918, (10000, 2): 1.383289176506112, (1, 5): 1.0010563440552807, (2, 5): 45.56928988158557, (3, 5): 129.42103493881507, (10, 5): 52.83872202882926, (100, 5): 9.548581827308116, (1000, 5): 5.800428535116234, (10000, 5): 0.885106131544692, (1, 10): 0.9967472591608297, (2, 10): 100.1002797952114, (3, 10): 140.91542404967376, (10, 10): 109.38904775339395, (100, 10): 3.611852043191122, (1000, 10): 3.8047143079712686, (10000, 10): 0.5559328268135855, (1, 15): 0.9990115922091529, (2, 15): 195.4857850387784, (3, 15): 179.12140269422176, (10, 15): 115.1242519809762, (100, 15): 16.64535616443629, (1000, 15): 2.294822623305785, (10000, 15): 0.47639555042649123}

As a graph.

plot_metric(bench_para, transpose=False, title="TopK and parallelisation\n"

"< 1 means parallelisation is faster", shrink=0.75)

<AxesSubplot: title={'center': 'TopK and parallelisation\n< 1 means parallelisation is faster'}, xlabel='N', ylabel='k'>

This is somehow expected. First column is closed to 1 as it is the same code being compared. Next columns are red, meaning the parallelisation does not help, the parallelisation helps for bigger N, as least more than 100.

Parallellisation with ONNX#

We replicate the same experiment with an ONNX graph.

k_ = numpy.array([3], dtype=numpy.int64)

node = OnnxTopK_11('X', 'k',

output_names=['dist', 'ind'])

model_onnx = node.to_onnx(

[('X', X32), ('k', k_)], target_opset=12,

# shape inference does not seem to work in onnxruntime

# so we speccify the output shape

outputs=[('dist', X32[:1, :5]),

('ind', X32[:1, :5].astype(numpy.int64))])

Test

oinf_no_para = OnnxInference(model_onnx, runtime="python")

res = oinf_no_para.run({'X': X32, 'k': k_})

dist, ind = res['dist'], res['ind']

dist[:2], ind[:2]

(array([[2.9598944, 2.1381311, 2.010453 ],

[2.168306 , 1.9842782, 1.9551469]], dtype=float32), array([[81, 7, 90],

[48, 20, 77]]))

Let’s play with the thresholds triggering the parallelisation.

oinf_para = OnnxInference(model_onnx, runtime="python")

oinf_no_para.sequence_[0].ops_.th_para = 100000000

oinf_para.sequence_[0].ops_.th_para = 1

Results.

bench_onnx_para = benchmark_test(

X32, (lambda x, k: oinf_no_para.run(

{'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

(lambda x, k: oinf_para.run(

{'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

N=N, K=K)

bench_onnx_para

0%| | 0/5 [00:00<?, ?it/s]

20%|## | 1/5 [00:53<03:32, 53.06s/it]

40%|#### | 2/5 [01:45<02:37, 52.56s/it]

60%|###### | 3/5 [02:36<01:43, 51.88s/it]

80%|######## | 4/5 [03:30<00:52, 52.92s/it]

100%|##########| 5/5 [04:29<00:00, 54.82s/it]

100%|##########| 5/5 [04:29<00:00, 53.81s/it]

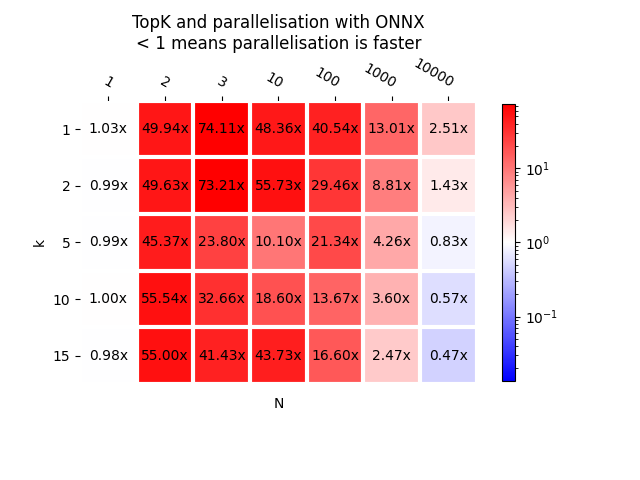

{(1, 1): 1.0304580306159878, (2, 1): 49.94166156920545, (3, 1): 74.11336667552264, (10, 1): 48.364799464861576, (100, 1): 40.5433443649383, (1000, 1): 13.00597437586643, (10000, 1): 2.505624326800244, (1, 2): 0.9945959157914268, (2, 2): 49.63366940774368, (3, 2): 73.21115554330184, (10, 2): 55.732164224214294, (100, 2): 29.458365072648725, (1000, 2): 8.814622182422703, (10000, 2): 1.4328127257160936, (1, 5): 0.9906022980403884, (2, 5): 45.37288422575921, (3, 5): 23.795563169153176, (10, 5): 10.102643397735074, (100, 5): 21.336256768633252, (1000, 5): 4.257789241612367, (10000, 5): 0.8271605271058224, (1, 10): 1.0020099813327028, (2, 10): 55.54110099167795, (3, 10): 32.66331502640634, (10, 10): 18.59519684908865, (100, 10): 13.673627683090166, (1000, 10): 3.5972219239846908, (10000, 10): 0.5727267030719756, (1, 15): 0.9780110362069955, (2, 15): 55.00178008497019, (3, 15): 41.43330113339258, (10, 15): 43.72503173604008, (100, 15): 16.604009681469364, (1000, 15): 2.4737542264389663, (10000, 15): 0.467104742482297}

As a graph.

plot_metric(bench_onnx_para, transpose=False,

title="TopK and parallelisation with ONNX\n< 1 means "

"parallelisation is faster", shrink=0.75)

<AxesSubplot: title={'center': 'TopK and parallelisation with ONNX\n< 1 means parallelisation is faster'}, xlabel='N', ylabel='k'>

Pretty much the same results.

onnxruntime vs mlprodict (no parallelisation)#

sess = InferenceSession(model_onnx.SerializeToString())

bench_ort = benchmark_test(

X32, (lambda x, k: sess.run(

None, {'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

(lambda x, k: oinf_no_para.run(

{'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

N=N, K=K)

bench_ort

0%| | 0/5 [00:00<?, ?it/s]

20%|## | 1/5 [00:37<02:29, 37.33s/it]

40%|#### | 2/5 [01:14<01:52, 37.34s/it]

60%|###### | 3/5 [01:53<01:15, 37.80s/it]

80%|######## | 4/5 [02:32<00:38, 38.36s/it]

100%|##########| 5/5 [03:13<00:00, 39.43s/it]

100%|##########| 5/5 [03:13<00:00, 38.71s/it]

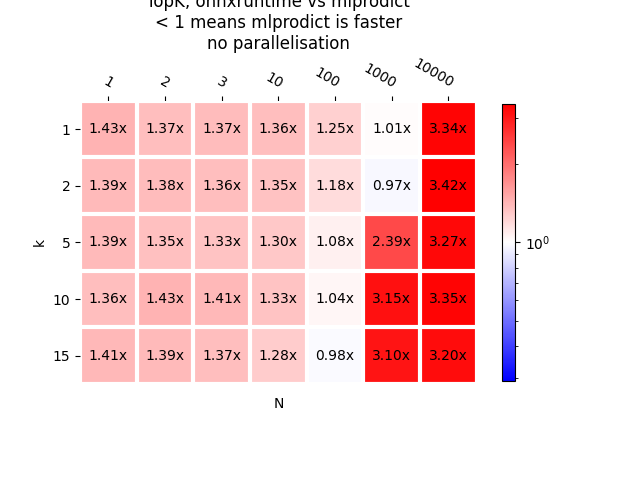

{(1, 1): 1.4306392307118136, (2, 1): 1.3717018849234592, (3, 1): 1.3747369732888854, (10, 1): 1.362807784298525, (100, 1): 1.2534840081241705, (1000, 1): 1.0108201696263532, (10000, 1): 3.336249801848911, (1, 2): 1.3946318060803726, (2, 2): 1.376662552949948, (3, 2): 1.3634604104417583, (10, 2): 1.3454144949760856, (100, 2): 1.1828691736261554, (1000, 2): 0.9693088537186199, (10000, 2): 3.4172867761235035, (1, 5): 1.3879813226404116, (2, 5): 1.3519935473986922, (3, 5): 1.3328492007153798, (10, 5): 1.3021673039869042, (100, 5): 1.0775188156494098, (1000, 5): 2.389343390224701, (10000, 5): 3.2696226672717503, (1, 10): 1.364066712037827, (2, 10): 1.4296014620227306, (3, 10): 1.4065117861503844, (10, 10): 1.3310711159901298, (100, 10): 1.0434829429908827, (1000, 10): 3.150829015832846, (10000, 10): 3.345964666076073, (1, 15): 1.4074986459121739, (2, 15): 1.3944408854547616, (3, 15): 1.3679987828475966, (10, 15): 1.2765606600689001, (100, 15): 0.9805961977799841, (1000, 15): 3.104376905230185, (10000, 15): 3.2048673020723673}

As a graph.

plot_metric(bench_ort, transpose=False,

title="TopK, onnxruntime vs mlprodict\n< 1 means mlprodict "

"is faster\nno parallelisation", shrink=0.75)

<AxesSubplot: title={'center': 'TopK, onnxruntime vs mlprodict\n< 1 means mlprodict is faster\nno parallelisation'}, xlabel='N', ylabel='k'>

It seems the implementation of operator TopK in onnxruntime 1.1.1 can be improved.

Versions:

ort_version, mlp_version

('1.13.1', '0.9.1887')

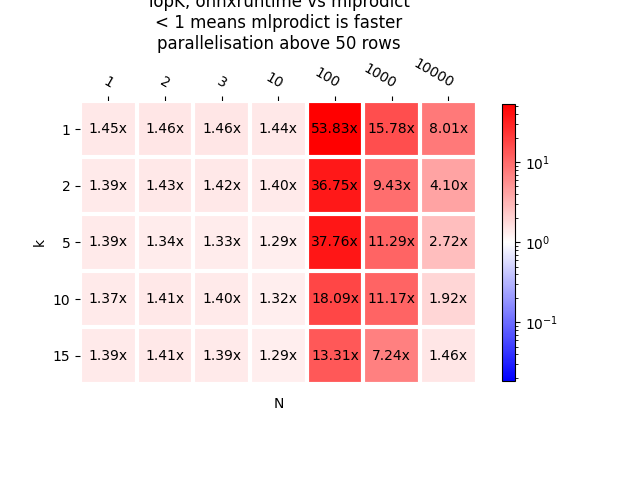

And with parallelisation above 50 rows.

oinf_para.sequence_[0].ops_.th_para = 50

bench_ort_para = benchmark_test(

X32, (lambda x, k: sess.run(

None, {'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

(lambda x, k: oinf_para.run(

{'X': x, 'k': numpy.array([k], dtype=numpy.int64)})),

N=N, K=K)

bench_ort_para

0%| | 0/5 [00:00<?, ?it/s]

20%|## | 1/5 [00:41<02:44, 41.19s/it]

40%|#### | 2/5 [01:21<02:02, 40.92s/it]

60%|###### | 3/5 [02:03<01:22, 41.42s/it]

80%|######## | 4/5 [02:46<00:41, 42.00s/it]

100%|##########| 5/5 [03:30<00:00, 42.63s/it]

100%|##########| 5/5 [03:30<00:00, 42.12s/it]

{(1, 1): 1.4505604906243672, (2, 1): 1.4596357430167772, (3, 1): 1.4566174023803524, (10, 1): 1.4400696744220443, (100, 1): 53.83216334206836, (1000, 1): 15.778742448383511, (10000, 1): 8.012290919500888, (1, 2): 1.3908225022460539, (2, 2): 1.4293890297329124, (3, 2): 1.4211736084279543, (10, 2): 1.3968391988106705, (100, 2): 36.7544062199547, (1000, 2): 9.42559431899878, (10000, 2): 4.097449436599585, (1, 5): 1.387115108552004, (2, 5): 1.340867245264949, (3, 5): 1.3298039649826499, (10, 5): 1.288572239467006, (100, 5): 37.757093447277015, (1000, 5): 11.286215002840258, (10000, 5): 2.715295770727163, (1, 10): 1.3743960920046079, (2, 10): 1.4143733862778989, (3, 10): 1.4002088746346506, (10, 10): 1.3199700467013458, (100, 10): 18.086155663753615, (1000, 10): 11.169446028505359, (10000, 10): 1.9221655706667453, (1, 15): 1.3936436816906703, (2, 15): 1.4127692386764572, (3, 15): 1.388192823682933, (10, 15): 1.2883068628297358, (100, 15): 13.306132998552997, (1000, 15): 7.2409594632544865, (10000, 15): 1.461522128520271}

As a graph.

plot_metric(bench_ort_para, transpose=False,

title="TopK, onnxruntime vs mlprodict\n< 1 means mlprodict "

"is faster\nparallelisation above 50 rows", shrink=0.75)

<AxesSubplot: title={'center': 'TopK, onnxruntime vs mlprodict\n< 1 means mlprodict is faster\nparallelisation above 50 rows'}, xlabel='N', ylabel='k'>

- onnxruntime and mlprodict implement the same algorithm.

The only difference comes from the threshold which trigger the parallelisation. It should depend on the machine. That explains the difference in time for 100 observations.

X = numpy.array([

[0, 1, 2, 3],

[4, 5, 6, 7],

[8, 9, 10, 11],

], dtype=numpy.float32)

K = numpy.array([3], dtype=numpy.int64)

node = OnnxTopK('X', K, output_names=['values', 'indices'],

op_version=12)

onx = node.to_onnx([('X', FloatTensorType())])

py_topk = OnnxInference(onx, runtime="python_compiled")

ort_topk = InferenceSession(onx.SerializeToString())

Check the outputs.

r1 = py_topk.run({'X': X})

r1

{'values': array([[ 3., 2., 1.],

[ 7., 6., 5.],

[11., 10., 9.]], dtype=float32), 'indices': array([[3, 2, 1],

[3, 2, 1],

[3, 2, 1]])}

r2 = ort_topk.run(None, {'X': X})

r2

[array([[ 3., 2., 1.],

[ 7., 6., 5.],

[11., 10., 9.]], dtype=float32), array([[3, 2, 1],

[3, 2, 1],

[3, 2, 1]], dtype=int64)]

Some figures.

bs = []

bs.append(measure_time(lambda: py_topk.run({'X': X}),

context=globals(), div_by_number=True))

bs[-1]['c'] = 'py'

bs[-1]

{'average': 4.906759643927216e-05, 'deviation': 9.002059404027586e-07, 'min_exec': 4.850869998335838e-05, 'max_exec': 5.1643881015479564e-05, 'repeat': 10, 'number': 50, 'ttime': 0.0004906759643927216, 'context_size': 2272, 'c': 'py'}

bs.append(measure_time(lambda: ort_topk.run(None, {'X': X}),

context=globals(), div_by_number=True))

bs[-1]['c'] = 'or'

bs[-1]

{'average': 4.793880949728191e-05, 'deviation': 5.419752606740932e-07, 'min_exec': 4.753009881824255e-05, 'max_exec': 4.9395898822695014e-05, 'repeat': 10, 'number': 50, 'ttime': 0.0004793880949728191, 'context_size': 2272, 'c': 'or'}

X = numpy.random.randn(10000, 100).astype(numpy.float32)

bs.append(measure_time(lambda: py_topk.run({'X': X}),

context=globals(), div_by_number=True))

bs[-1]['c'] = 'py-100'

bs[-1]

{'average': 0.007579522713785991, 'deviation': 0.00031576592226994387, 'min_exec': 0.006823993700090796, 'max_exec': 0.007875203518196941, 'repeat': 10, 'number': 50, 'ttime': 0.07579522713785991, 'context_size': 2272, 'c': 'py-100'}

bs.append(measure_time(lambda: ort_topk.run(None, {'X': X}),

context=globals(), div_by_number=True))

bs[-1]['c'] = 'ort-100'

bs[-1]

{'average': 0.0020190685361158103, 'deviation': 1.0210657449903279e-05, 'min_exec': 0.002008609820622951, 'max_exec': 0.0020338385622017084, 'repeat': 10, 'number': 50, 'ttime': 0.020190685361158103, 'context_size': 2272, 'c': 'ort-100'}

df = DataFrame(bs)

df

Total running time of the script: ( 14 minutes 54.203 seconds)