Note

Click here to download the full example code

Compares implementations of ReduceSum#

This example compares the numpy.sum from numpy, to onnxruntime implementation. If available, tensorflow and pytorch are included as well.

Available optimisation#

The code shows which parallelisation optimisation could be used, AVX or SSE and the number of available processors.

import numpy

import pandas

import matplotlib.pyplot as plt

from onnxruntime import InferenceSession

from skl2onnx.common.data_types import FloatTensorType

from skl2onnx.algebra.onnx_ops import OnnxReduceSumApi11

from cpyquickhelper.numbers import measure_time

from tqdm import tqdm

from mlprodict.testing.experimental_c_impl.experimental_c import (

code_optimisation, custom_reducesum_rk_float)

print(code_optimisation())

AVX-omp=8

ReduceSum implementations#

try:

from tensorflow.math import reduce_sum as tf_reduce_sum

from tensorflow import convert_to_tensor

except ImportError:

tf_reduce_sum = None

try:

from torch import sum as torch_sum, from_numpy

except ImportError:

torch_sum = None

def build_ort_reducesum(axes, op_version=14): # opset=13, 14, ...

node = OnnxReduceSumApi11('x', axes=axes, op_version=op_version,

output_names=['z'])

onx = node.to_onnx(inputs=[('x', FloatTensorType())],

target_opset=op_version)

sess = InferenceSession(onx.SerializeToString())

return lambda x, y: sess.run(None, {'x': x})

def loop_fct(fct, xs, ys):

for x, y in zip(xs, ys):

fct(x, y)

def benchmark_op(axes, repeat=5, number=5, name="ReduceSum", shape_fct=None,

custom_impl=False):

if shape_fct is None:

def shape_fct(dim):

return (3, dim, 1, 128, 64)

ort_fct = build_ort_reducesum(axes)

res = []

for dim in tqdm([8, 16, 32, 64, 100, 128, 200,

256, 400, 512, 1024]):

shape = shape_fct(dim)

n_arrays = 10 if dim < 512 else 4

xs = [numpy.random.rand(*shape).astype(numpy.float32)

for _ in range(n_arrays)]

ys = [numpy.array(axes, dtype=numpy.int64)

for _ in range(n_arrays)]

info = dict(axes=axes, shape=shape)

# numpy

ctx = dict(

xs=xs, ys=ys,

fct=lambda x, y: numpy.sum(x, *y),

loop_fct=loop_fct)

obs = measure_time(

"loop_fct(fct, xs, ys)",

div_by_number=True, context=ctx, repeat=repeat, number=number)

obs['dim'] = dim

obs['fct'] = 'numpy'

obs.update(info)

res.append(obs)

# onnxruntime

ctx['fct'] = ort_fct

obs = measure_time(

"loop_fct(fct, xs, ys)",

div_by_number=True, context=ctx, repeat=repeat, number=number)

obs['dim'] = dim

obs['fct'] = 'ort'

obs.update(info)

res.append(obs)

if custom_impl:

if axes != (0, ):

raise RuntimeError(

f"Unexpected axes={axes!r}.")

ctx['fct'] = lambda x, y: custom_reducesum_rk_float(x)

ctx['xs'] = [x.reshape((x.shape[0], -1)).copy() for x in xs]

obs = measure_time(

"loop_fct(fct, xs, ys)",

div_by_number=True, context=ctx, repeat=repeat, number=number)

obs['dim'] = dim

obs['fct'] = 'custom'

obs.update(info)

res.append(obs)

if tf_reduce_sum is not None:

# tensorflow

ctx['fct'] = tf_reduce_sum

ctx['xs'] = [convert_to_tensor(x) for x in xs]

ctx['ys'] = ys

obs = measure_time(

"loop_fct(fct, xs, ys)",

div_by_number=True, context=ctx, repeat=repeat, number=number)

obs['dim'] = dim

obs['fct'] = 'tf'

obs.update(info)

res.append(obs)

if torch_sum is not None:

def torch_sum1(x, y):

return torch_sum(x, y[0])

def torch_sum2(x, y):

return torch_sum(torch_sum(x, y[1]), y[0])

# torch

ctx['fct'] = torch_sum1 if len(axes) == 1 else torch_sum2

ctx['xs'] = [from_numpy(x) for x in xs]

ctx['ys'] = ys # [from_numpy(y) for y in ys]

obs = measure_time(

"loop_fct(fct, xs, ys)",

div_by_number=True, context=ctx, repeat=repeat, number=number)

obs['dim'] = dim

obs['fct'] = 'torch'

obs.update(info)

res.append(obs)

# Dataframes

shape_name = str(shape).replace(str(dim), "N")

df = pandas.DataFrame(res)

df.columns = [_.replace('dim', 'N') for _ in df.columns]

piv = df.pivot('N', 'fct', 'average')

rs = piv.copy()

for c in ['ort', 'torch', 'tf', 'tf_copy']:

if c in rs.columns:

rs[c] = rs['numpy'] / rs[c]

rs['numpy'] = 1.

# Graphs.

fig, ax = plt.subplots(1, 2, figsize=(12, 4))

piv.plot(logx=True, logy=True, ax=ax[0],

title=f"{name} benchmark\n{shape_name!r} - {axes!r} lower better")

ax[0].legend(prop={"size": 9})

rs.plot(logx=True, logy=True, ax=ax[1],

title="%s Speedup, baseline=numpy\n%r - %r"

" higher better" % (name, shape_name, axes))

ax[1].plot([min(rs.index), max(rs.index)], [0.5, 0.5], 'g--')

ax[1].plot([min(rs.index), max(rs.index)], [2., 2.], 'g--')

ax[1].legend(prop={"size": 9})

return df, rs, ax

dfs = []

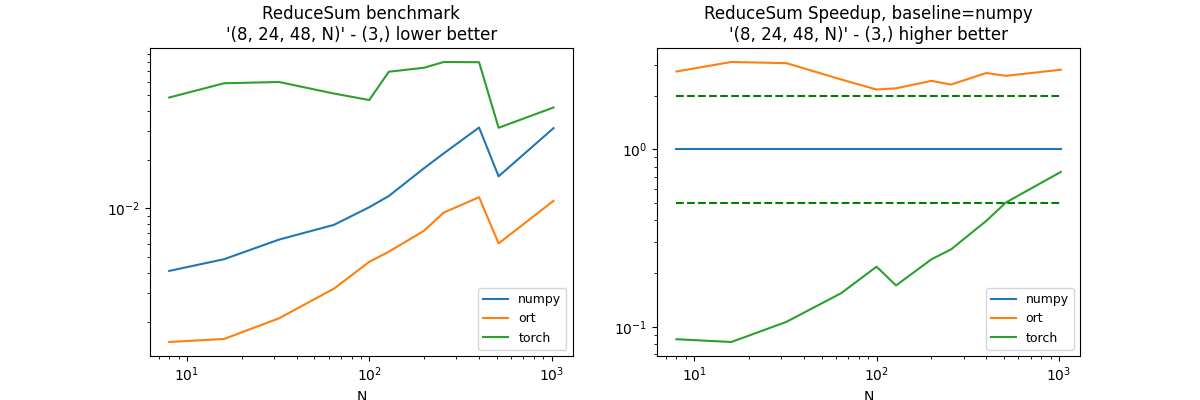

Reduction on a particular case KR#

Consecutive axis not reduced and consecutive reduced axis are merged. KR means kept axis - reduced axis

(8, 24, 48, N), axis=(3, )#

axes = (3, )

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (8, 24, 48, dim))

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:01<00:13, 1.37s/it]

18%|#8 | 2/11 [00:03<00:13, 1.55s/it]

27%|##7 | 3/11 [00:04<00:13, 1.66s/it]

36%|###6 | 4/11 [00:06<00:11, 1.68s/it]

45%|####5 | 5/11 [00:08<00:10, 1.71s/it]

55%|#####4 | 6/11 [00:10<00:09, 1.97s/it]

64%|######3 | 7/11 [00:13<00:09, 2.28s/it]

73%|#######2 | 8/11 [00:17<00:07, 2.62s/it]

82%|########1 | 9/11 [00:21<00:06, 3.04s/it]

91%|######### | 10/11 [00:22<00:02, 2.66s/it]

100%|##########| 11/11 [00:25<00:00, 2.77s/it]

100%|##########| 11/11 [00:25<00:00, 2.35s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:189: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

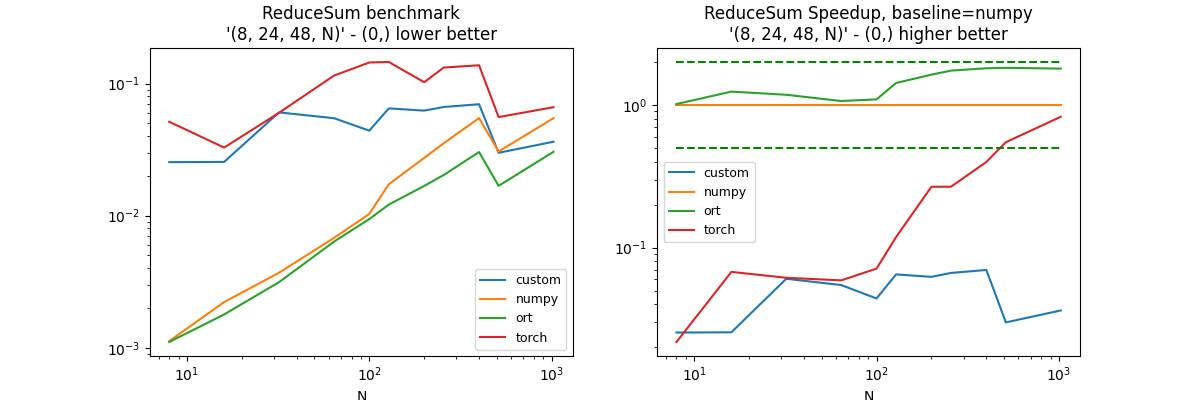

Reduction on a particular case RK#

Consecutive axis not reduced and consecutive reduced axis are merged. RK means reduced axis - kept axis

(8, 24, 48, N), axis=(0, )#

axes = (0, )

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (8, 24, 48, dim),

custom_impl=True)

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:01<00:19, 2.00s/it]

18%|#8 | 2/11 [00:03<00:15, 1.77s/it]

27%|##7 | 3/11 [00:06<00:19, 2.45s/it]

36%|###6 | 4/11 [00:11<00:23, 3.35s/it]

45%|####5 | 5/11 [00:17<00:24, 4.11s/it]

55%|#####4 | 6/11 [00:23<00:24, 4.86s/it]

64%|######3 | 7/11 [00:29<00:20, 5.13s/it]

73%|#######2 | 8/11 [00:36<00:17, 5.71s/it]

82%|########1 | 9/11 [00:44<00:13, 6.51s/it]

91%|######### | 10/11 [00:48<00:05, 5.68s/it]

100%|##########| 11/11 [00:53<00:00, 5.67s/it]

100%|##########| 11/11 [00:53<00:00, 4.89s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:206: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

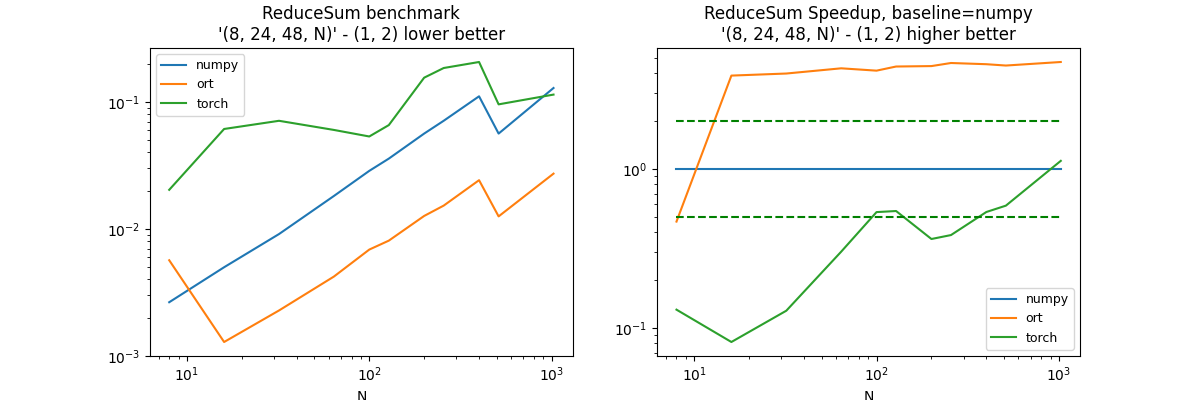

Reduction on a particular case KRK#

Consecutive axis not reduced and consecutive reduced axis are merged. KRK means kept axis - reduced axis - kept axis,

(8, 24, 48, N), axis=(1, 2)#

axes = (1, 2)

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (8, 24, 48, dim))

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:00<00:07, 1.36it/s]

18%|#8 | 2/11 [00:02<00:11, 1.32s/it]

27%|##7 | 3/11 [00:04<00:13, 1.69s/it]

36%|###6 | 4/11 [00:06<00:13, 1.90s/it]

45%|####5 | 5/11 [00:09<00:12, 2.10s/it]

55%|#####4 | 6/11 [00:12<00:12, 2.41s/it]

64%|######3 | 7/11 [00:18<00:14, 3.60s/it]

73%|#######2 | 8/11 [00:25<00:14, 4.80s/it]

82%|########1 | 9/11 [00:35<00:12, 6.24s/it]

91%|######### | 10/11 [00:39<00:05, 5.73s/it]

100%|##########| 11/11 [00:47<00:00, 6.32s/it]

100%|##########| 11/11 [00:47<00:00, 4.31s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:222: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

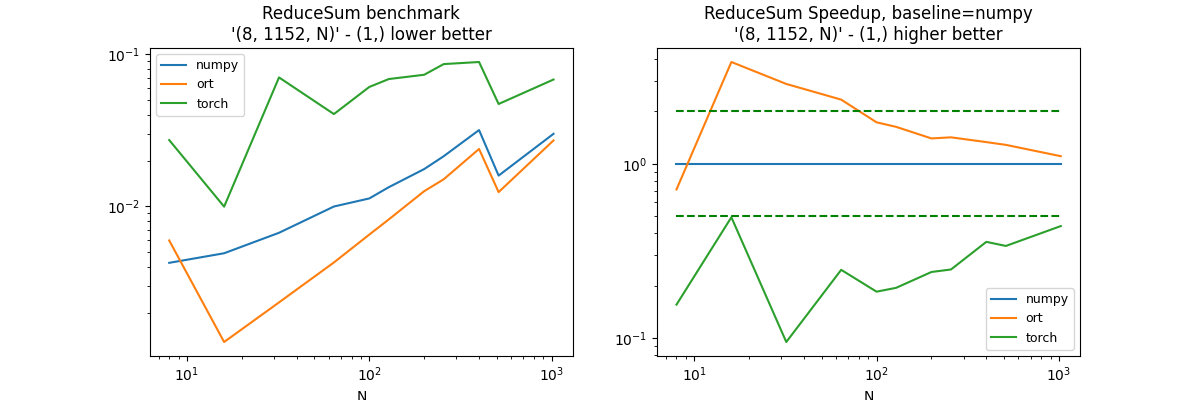

(8, 24 * 48, N), axis=1#

axes = (1, )

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (8, 24 * 48, dim))

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:00<00:09, 1.04it/s]

18%|#8 | 2/11 [00:01<00:05, 1.52it/s]

27%|##7 | 3/11 [00:03<00:10, 1.30s/it]

36%|###6 | 4/11 [00:04<00:09, 1.39s/it]

45%|####5 | 5/11 [00:07<00:10, 1.68s/it]

55%|#####4 | 6/11 [00:09<00:09, 1.98s/it]

64%|######3 | 7/11 [00:12<00:09, 2.33s/it]

73%|#######2 | 8/11 [00:16<00:08, 2.75s/it]

82%|########1 | 9/11 [00:20<00:06, 3.30s/it]

91%|######### | 10/11 [00:23<00:03, 3.01s/it]

100%|##########| 11/11 [00:27<00:00, 3.33s/it]

100%|##########| 11/11 [00:27<00:00, 2.49s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:231: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

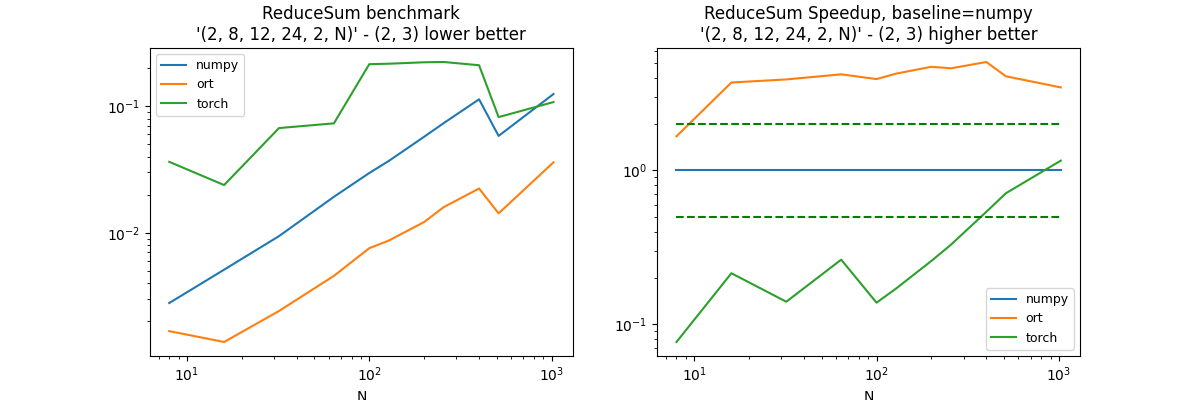

(2, 8, 12, 24, 2, N), axis=(2, 3)#

axes = (2, 3)

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (2, 8, 12, 24, 2, dim))

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:01<00:10, 1.04s/it]

18%|#8 | 2/11 [00:01<00:08, 1.11it/s]

27%|##7 | 3/11 [00:03<00:11, 1.43s/it]

36%|###6 | 4/11 [00:06<00:13, 1.88s/it]

45%|####5 | 5/11 [00:13<00:21, 3.56s/it]

55%|#####4 | 6/11 [00:19<00:23, 4.68s/it]

64%|######3 | 7/11 [00:27<00:22, 5.68s/it]

73%|#######2 | 8/11 [00:36<00:19, 6.55s/it]

82%|########1 | 9/11 [00:45<00:14, 7.49s/it]

91%|######### | 10/11 [00:49<00:06, 6.52s/it]

100%|##########| 11/11 [00:57<00:00, 6.86s/it]

100%|##########| 11/11 [00:57<00:00, 5.23s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:240: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

Reduction on a particular case RKRK#

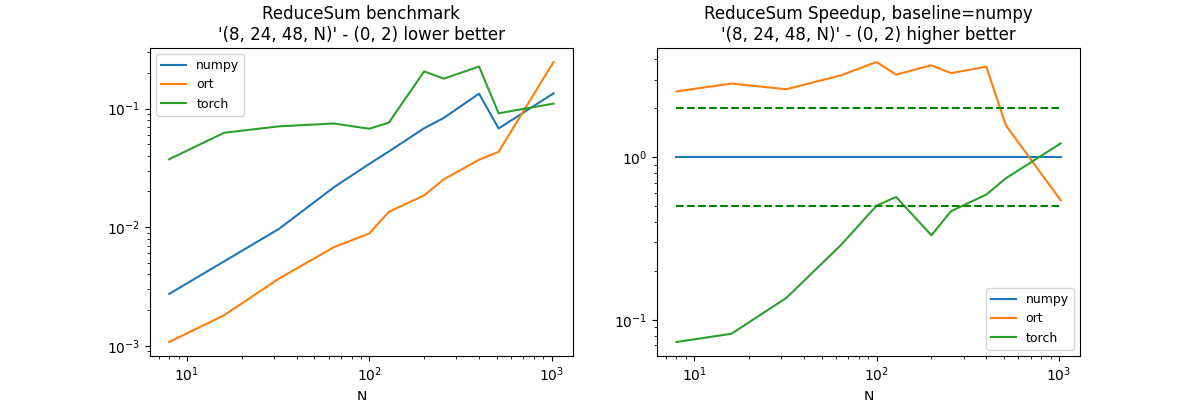

(8, 24, 48, N), axis=(0, 2)#

axes = (0, 2)

df, piv, ax = benchmark_op(axes, shape_fct=lambda dim: (8, 24, 48, dim))

dfs.append(df)

df.pivot("fct", "N", "average")

0%| | 0/11 [00:00<?, ?it/s]

9%|9 | 1/11 [00:01<00:10, 1.05s/it]

18%|#8 | 2/11 [00:02<00:13, 1.48s/it]

27%|##7 | 3/11 [00:05<00:14, 1.80s/it]

36%|###6 | 4/11 [00:07<00:15, 2.17s/it]

45%|####5 | 5/11 [00:10<00:14, 2.46s/it]

55%|#####4 | 6/11 [00:14<00:14, 2.86s/it]

64%|######3 | 7/11 [00:22<00:17, 4.46s/it]

73%|#######2 | 8/11 [00:29<00:16, 5.51s/it]

82%|########1 | 9/11 [00:40<00:14, 7.17s/it]

91%|######### | 10/11 [00:46<00:06, 6.66s/it]

100%|##########| 11/11 [00:59<00:00, 8.66s/it]

100%|##########| 11/11 [00:59<00:00, 5.40s/it]

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:151: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

piv = df.pivot('N', 'fct', 'average')

somewhere/workspace/mlprodict/mlprodict_UT_39_std/_doc/examples/plot_op_reducesum.py:252: FutureWarning: In a future version of pandas all arguments of DataFrame.pivot will be keyword-only.

df.pivot("fct", "N", "average")

Conclusion#

Some of the configurations should be investigated. l-reducesum-problem1. The reduction on tensorflow in one dimension seems to be lazy.

merged = pandas.concat(dfs)

name = "reducesum"

merged.to_csv(f"plot_{name}.csv", index=False)

merged.to_excel(f"plot_{name}.xlsx", index=False)

plt.savefig(f"plot_{name}.png")

plt.show()

Total running time of the script: ( 4 minutes 43.395 seconds)