Benchmark operator Slice#

This short example compares the execution time of the ONNX operator Slice on CPU and, when available, on GPU, for three slice configurations and several input shapes.
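The three configurations are the `(starts, ends)` pairs passed to `build_ort_op` in the benchmark section further down. As a rough guide to what each one selects, the sketch below translates a pair into the equivalent tuple of Python `slice` objects. The helper `to_index` is hypothetical and not part of any library used here; it assumes the ONNX convention that Slice applies to the leading axes when `axes` is omitted, and it treats `None` as "leave this axis untouched", which is how `build_ort_op` builds its `axes` input.

```python
def to_index(starts, ends):
    """Hypothetical helper: turn a (starts, ends) pair into a tuple of
    Python slices that selects the same region as the ONNX Slice node."""
    # A None entry marks an axis that is not sliced (full range);
    # any other entry becomes start:end on the axis at that position.
    return tuple(
        slice(None) if s is None else slice(s, e)
        for s, e in zip(starts, ends))


configs = [([1, 1], [-1, -1]), ([1], [-1]), ([None, 1], [None, -1])]
for starts, ends in configs:
    print((starts, ends), '->', to_index(starts, ends))
```

With a numpy array `x`, `x[to_index(starts, ends)]` then reads as `x[1:-1, 1:-1]`, `x[1:-1]` and `x[:, 1:-1]` for the three pairs respectively.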

A simple example#

import numpy
from numpy.testing import assert_almost_equal
from pandas import DataFrame, pivot_table
from onnxruntime import InferenceSession, get_device
from onnxruntime.capi._pybind_state import (  # pylint: disable=E0611
    OrtValue as C_OrtValue)
from skl2onnx.common.data_types import FloatTensorType
from skl2onnx.algebra.onnx_ops import OnnxSlice, OnnxAdd, OnnxMul
from cpyquickhelper.numbers.speed_measure import measure_time
from mlprodict.testing.experimental_c_impl.experimental_c import code_optimisation
from mlprodict.onnxrt import OnnxInference
from mlprodict.plotting.plotting_onnx import plot_onnx
from onnxcustom.utils.onnxruntime_helper import get_ort_device
from tqdm import tqdm

print([code_optimisation(), get_device()])

['AVX-omp=8', 'CPU']

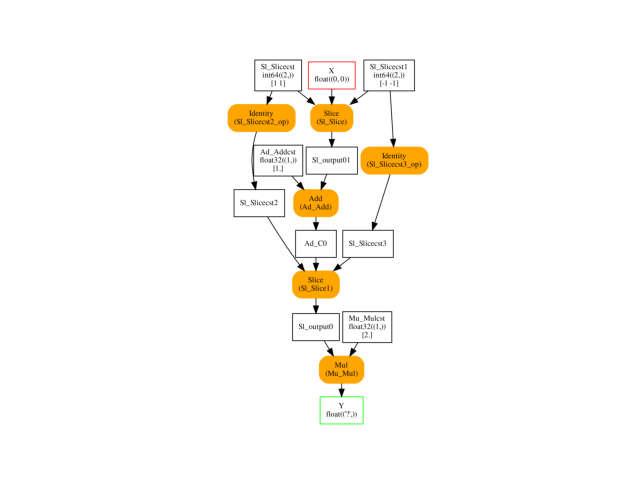

The graph to benchmark chains four nodes: Slice, Add, Slice and Mul.

def build_ort_op(op_version=14, save=None, slices=None):  # opset=13, 14, ...
    if slices is None:
        starts = numpy.array([1, 1], dtype=numpy.int64)
        ends = numpy.array([-1, -1], dtype=numpy.int64)
        axes = None
    else:
        starts, ends = slices
        if starts[0] is None:
            indexes = [i for i in range(len(starts)) if starts[i] is not None]
            starts = numpy.array(
                [n for n in starts if n is not None], dtype=numpy.int64)
            ends = numpy.array(
                [n for n in ends if n is not None], dtype=numpy.int64)
            axes = numpy.array(indexes, dtype=numpy.int64)
        else:
            starts = numpy.array(starts, dtype=numpy.int64)
            ends = numpy.array(ends, dtype=numpy.int64)
            axes = None

    if axes is None:
        node1 = OnnxSlice('X', starts, ends, op_version=op_version)
    else:
        node1 = OnnxSlice('X', starts, ends, axes, op_version=op_version)
    node2 = OnnxAdd(node1, numpy.array([1], dtype=numpy.float32),
                    op_version=op_version)
    if axes is None:
        node3 = OnnxSlice(node2, starts, ends, op_version=op_version)
    else:
        node3 = OnnxSlice(node2, starts, ends, axes, op_version=op_version)
    node4 = OnnxMul(node3, numpy.array([2], dtype=numpy.float32),
                    op_version=op_version, output_names=['Y'])
    onx = node4.to_onnx(inputs=[('X', FloatTensorType([None, None]))],
                        target_opset=op_version)
    return onx


onx = build_ort_op()
plot_onnx(onx)

<AxesSubplot: >

Execution on CPU#

x = numpy.random.rand(50, 50).astype(numpy.float32)

oinf = OnnxInference(onx)

oinf.run({'X': x}, verbose=1, fLOG=print)

+ki='Sl_Slicecst': (2,) (dtype=int64 min=1 max=1)

+ki='Sl_Slicecst1': (2,) (dtype=int64 min=-1 max=-1)

+ki='Ad_Addcst': (1,) (dtype=float32 min=1.0 max=1.0)

+ki='Mu_Mulcst': (1,) (dtype=float32 min=2.0 max=2.0)

-- OnnxInference: run 6 nodes with 1 inputs

Onnx-Identity(Sl_Slicecst) -> Sl_Slicecst2 (name='Sl_Slicecst2_op')

+kr='Sl_Slicecst2': (2,) (dtype=int64 min=1 max=1)

Onnx-Identity(Sl_Slicecst1) -> Sl_Slicecst3 (name='Sl_Slicecst3_op')

+kr='Sl_Slicecst3': (2,) (dtype=int64 min=-1 max=-1)

Onnx-Slice(X, Sl_Slicecst, Sl_Slicecst1) -> Sl_output01 (name='Sl_Slice')

+kr='Sl_output01': (48, 48) (dtype=float32 min=9.875727846520022e-05 max=0.9997695088386536)

Onnx-Add(Sl_output01, Ad_Addcst) -> Ad_C0 (name='Ad_Add')

+kr='Ad_C0': (48, 48) (dtype=float32 min=1.000098705291748 max=1.9997694492340088)

Onnx-Slice(Ad_C0, Sl_Slicecst2, Sl_Slicecst3) -> Sl_output0 (name='Sl_Slice1')

+kr='Sl_output0': (46, 46) (dtype=float32 min=1.000098705291748 max=1.9997694492340088)

Onnx-Mul(Sl_output0, Mu_Mulcst) -> Y (name='Mu_Mul')

+kr='Y': (46, 46) (dtype=float32 min=2.000197410583496 max=3.9995388984680176)

{'Y': array([[3.419556 , 2.5125964, 2.6644747, ..., 2.2184076, 3.9621744,

2.5592406],

[3.822313 , 3.8818226, 2.3897986, ..., 3.829173 , 3.9068456,

3.6176982],

[2.5244346, 2.5971172, 2.0926566, ..., 2.8305347, 3.1156988,

2.5614848],

...,

[2.336208 , 3.693772 , 2.6583784, ..., 2.9779284, 3.9650922,

2.4234152],

[2.973298 , 3.8292289, 2.6124973, ..., 3.71129 , 2.1220028,

3.7119985],

[3.939609 , 3.942213 , 2.0477579, ..., 2.6146688, 2.7894301,

2.039695 ]], dtype=float32)}
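The shapes in the log above (50, then 48, then 46 along each axis) follow from applying `[1:-1, 1:-1]` twice. As a sanity check, the default graph can be written directly in numpy. This is only a sketch of what the graph computes for the default slices, not the code mlprodict or onnxruntime execute; the function name `reference` is made up for the illustration.

```python
import numpy


def reference(x):
    """Numpy equivalent of the default graph: Slice, Add, Slice, Mul."""
    # First Slice drops one row/column on each side, then Add adds 1.
    inner = x[1:-1, 1:-1] + numpy.float32(1)
    # Second Slice drops one more row/column on each side, then Mul doubles.
    return inner[1:-1, 1:-1] * numpy.float32(2)


x = numpy.random.rand(50, 50).astype(numpy.float32)
y = reference(x)
print(y.shape, y.dtype)
```

The result can be compared with the output `Y` of either runtime using `assert_almost_equal`, provided the same input `x` is used.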

With onnxruntime.

sess = InferenceSession(onx.SerializeToString(),
                        providers=["CPUExecutionProvider"])
y_cpu = sess.run(None, {'X': x})[0]

Execution on GPU#

This part only runs if onnxruntime reports a GPU device and the input can be copied to cuda:0.

if get_device().upper() == 'GPU':
    dev = get_ort_device('cuda:0')
    try:
        gx = C_OrtValue.ortvalue_from_numpy(x, dev)
        cuda = True
    except RuntimeError as e:
        print(e)
        cuda = False
else:
    cuda = False

if cuda:
    sessg = InferenceSession(onx.SerializeToString(),
                             providers=["CUDAExecutionProvider"])

    io_binding = sessg.io_binding()._iobinding
    io_binding.bind_input(
        'X', dev, numpy.float32, gx.shape(), gx.data_ptr())
    io_binding.bind_output('Y', dev)
    sessg._sess.run_with_iobinding(io_binding, None)
    y_gpu = io_binding.copy_outputs_to_cpu()[0]
    assert_almost_equal(y_cpu, y_gpu)

Benchmark#

data = []
shapes = ([(n, n) for n in [10, 100, 1000]] +
          [(n, 100) for n in [10, 100, 1000, 10000]] +
          [(100, n) for n in [10, 100, 1000, 10000]])
slices = [([1, 1], [-1, -1]), ([1], [-1]), ([None, 1], [None, -1])]
shape_slices = [(sh, sl) for sh in shapes for sl in slices]

# The loop variable is named slc so that it does not shadow the list slices.
for shape, slc in tqdm(shape_slices):
    onx = build_ort_op(slices=slc)
    x = numpy.random.rand(*shape).astype(numpy.float32)

    # Fewer repetitions for the biggest inputs.
    number = 100
    if x.size >= 100000:
        number = 10

    sess = InferenceSession(
        onx.SerializeToString(),
        providers=["CPUExecutionProvider"])
    sess.run(None, {'X': x})  # warm-up run, not measured

    obs = dict(
        shape=str(shape).replace(" ", ""),
        slice=str(slc).replace(" ", ""))
    r = measure_time(lambda: sess.run(None, {'X': x}),
                     number=number, div_by_number=True,
                     context={})
    obs.update(r)
    obs['provider'] = 'CPU'
    data.append(obs)

    if cuda:
        def sess_run(sess, io_binding, x, dev):
            # x is an OrtValue already stored on the device.
            io_binding.bind_input(
                'X', dev, numpy.float32, x.shape(), x.data_ptr())
            io_binding.bind_output('Y', dev)
            # Same call as in the GPU section above (run options set to None).
            sess._sess.run_with_iobinding(io_binding, None)

        # The binding must come from the CUDA session, so the session
        # is created first.
        sess = InferenceSession(
            onx.SerializeToString(),
            providers=["CUDAExecutionProvider"])
        io_binding = sess.io_binding()._iobinding
        dev = get_ort_device('cuda:0')
        gx = C_OrtValue.ortvalue_from_numpy(x, dev)
        sess_run(sess, io_binding, gx, dev)  # warm-up run, not measured

        obs = dict(
            shape=str(shape).replace(" ", ""),
            slice=str(slc).replace(" ", ""))
        r = measure_time(
            lambda: sess_run(sess, io_binding, gx, dev),
            number=number,
            div_by_number=True,
            context={
                'sess': sess, 'gx': gx, 'io_binding': io_binding,
                'dev': dev, 'sess_run': sess_run})
        obs.update(r)
        obs['provider'] = 'GPU'
        data.append(obs)

df = DataFrame(data)
print(df)

0%| | 0/33 [00:00<?, ?it/s]

6%|6 | 2/33 [00:00<00:02, 11.29it/s]

12%|#2 | 4/33 [00:00<00:02, 10.78it/s]

18%|#8 | 6/33 [00:00<00:02, 9.75it/s]

21%|##1 | 7/33 [00:01<00:06, 4.27it/s]

24%|##4 | 8/33 [00:01<00:07, 3.17it/s]

27%|##7 | 9/33 [00:02<00:09, 2.46it/s]

33%|###3 | 11/33 [00:02<00:05, 3.76it/s]

39%|###9 | 13/33 [00:02<00:04, 4.88it/s]

42%|####2 | 14/33 [00:02<00:03, 5.42it/s]

45%|####5 | 15/33 [00:03<00:03, 5.91it/s]

48%|####8 | 16/33 [00:03<00:02, 6.44it/s]

52%|#####1 | 17/33 [00:03<00:02, 6.71it/s]

55%|#####4 | 18/33 [00:03<00:02, 7.24it/s]

58%|#####7 | 19/33 [00:04<00:04, 3.49it/s]

61%|###### | 20/33 [00:04<00:04, 2.81it/s]

64%|######3 | 21/33 [00:05<00:05, 2.24it/s]

70%|######9 | 23/33 [00:05<00:02, 3.53it/s]

76%|#######5 | 25/33 [00:05<00:01, 4.75it/s]

79%|#######8 | 26/33 [00:05<00:01, 5.30it/s]

82%|########1 | 27/33 [00:05<00:01, 5.87it/s]

88%|########7 | 29/33 [00:06<00:00, 6.98it/s]

91%|######### | 30/33 [00:06<00:00, 7.38it/s]

94%|#########3| 31/33 [00:06<00:00, 3.86it/s]

97%|#########6| 32/33 [00:07<00:00, 3.05it/s]

100%|##########| 33/33 [00:07<00:00, 2.40it/s]

100%|##########| 33/33 [00:08<00:00, 4.12it/s]

shape slice ... context_size provider

0 (10,10) ([1,1],[-1,-1]) ... 64 CPU

1 (10,10) ([1],[-1]) ... 64 CPU

2 (10,10) ([None,1],[None,-1]) ... 64 CPU

3 (100,100) ([1,1],[-1,-1]) ... 64 CPU

4 (100,100) ([1],[-1]) ... 64 CPU

5 (100,100) ([None,1],[None,-1]) ... 64 CPU

6 (1000,1000) ([1,1],[-1,-1]) ... 64 CPU

7 (1000,1000) ([1],[-1]) ... 64 CPU

8 (1000,1000) ([None,1],[None,-1]) ... 64 CPU

9 (10,100) ([1,1],[-1,-1]) ... 64 CPU

10 (10,100) ([1],[-1]) ... 64 CPU

11 (10,100) ([None,1],[None,-1]) ... 64 CPU

12 (100,100) ([1,1],[-1,-1]) ... 64 CPU

13 (100,100) ([1],[-1]) ... 64 CPU

14 (100,100) ([None,1],[None,-1]) ... 64 CPU

15 (1000,100) ([1,1],[-1,-1]) ... 64 CPU

16 (1000,100) ([1],[-1]) ... 64 CPU

17 (1000,100) ([None,1],[None,-1]) ... 64 CPU

18 (10000,100) ([1,1],[-1,-1]) ... 64 CPU

19 (10000,100) ([1],[-1]) ... 64 CPU

20 (10000,100) ([None,1],[None,-1]) ... 64 CPU

21 (100,10) ([1,1],[-1,-1]) ... 64 CPU

22 (100,10) ([1],[-1]) ... 64 CPU

23 (100,10) ([None,1],[None,-1]) ... 64 CPU

24 (100,100) ([1,1],[-1,-1]) ... 64 CPU

25 (100,100) ([1],[-1]) ... 64 CPU

26 (100,100) ([None,1],[None,-1]) ... 64 CPU

27 (100,1000) ([1,1],[-1,-1]) ... 64 CPU

28 (100,1000) ([1],[-1]) ... 64 CPU

29 (100,1000) ([None,1],[None,-1]) ... 64 CPU

30 (100,10000) ([1,1],[-1,-1]) ... 64 CPU

31 (100,10000) ([1],[-1]) ... 64 CPU

32 (100,10000) ([None,1],[None,-1]) ... 64 CPU

[33 rows x 11 columns]

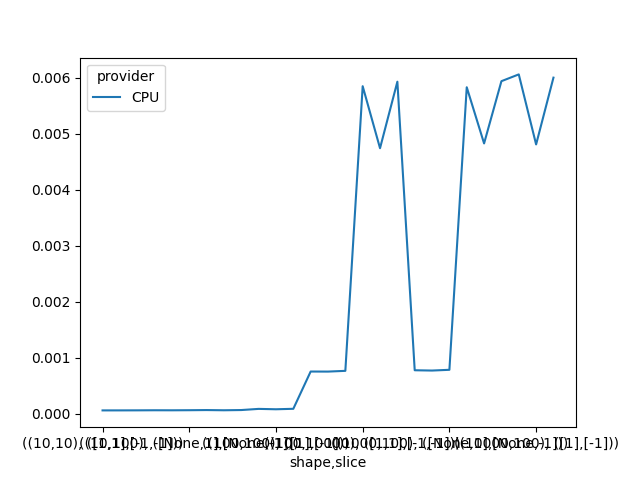
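The benchmark relies on `measure_time` from cpyquickhelper, called with `number` and `div_by_number=True` so that the reported values are per-call times. For readers without that package, the idea can be approximated with the standard library. The function `time_call` below is a hypothetical stand-in written for this note, not cpyquickhelper's implementation, and the keys of the returned dictionary are chosen only to resemble the columns used later on.

```python
import statistics
import timeit


def time_call(fct, repeat=10, number=100):
    """Hypothetical stand-in: time one call to fct, in seconds."""
    # Each entry is the total time of `number` consecutive calls.
    totals = timeit.repeat(fct, repeat=repeat, number=number)
    # Dividing by `number` gives a per-call time for each repetition.
    per_call = [t / number for t in totals]
    return dict(average=statistics.mean(per_call),
                deviation=statistics.pstdev(per_call),
                min_exec=min(per_call), max_exec=max(per_call),
                repeat=repeat, number=number)


res = time_call(lambda: sum(range(1000)), repeat=5, number=100)
print(res)
```

In the benchmark loop the measured callable would be `lambda: sess.run(None, {'X': x})`, with a smaller `number` for the largest inputs.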

Better display#

piv = pivot_table(
    df, index=["shape", "slice"], columns="provider", values="average")
if 'GPU' in piv.columns:
    piv['ratio'] = piv['GPU'] / piv['CPU']
print(piv)

provider                                CPU
shape        slice
(10,10)      ([1,1],[-1,-1])       0.000061
             ([1],[-1])            0.000061
             ([None,1],[None,-1])  0.000062
(10,100)     ([1,1],[-1,-1])       0.000063
             ([1],[-1])            0.000063
             ([None,1],[None,-1])  0.000064
(100,10)     ([1,1],[-1,-1])       0.000067
             ([1],[-1])            0.000063
             ([None,1],[None,-1])  0.000067
(100,100)    ([1,1],[-1,-1])       0.000088
             ([1],[-1])            0.000081
             ([None,1],[None,-1])  0.000089
(100,1000)   ([1,1],[-1,-1])       0.000755
             ([1],[-1])            0.000754
             ([None,1],[None,-1])  0.000767
(100,10000)  ([1,1],[-1,-1])       0.005852
             ([1],[-1])            0.004744
             ([None,1],[None,-1])  0.005932
(1000,100)   ([1,1],[-1,-1])       0.000778
             ([1],[-1])            0.000773
             ([None,1],[None,-1])  0.000786
(1000,1000)  ([1,1],[-1,-1])       0.005833
             ([1],[-1])            0.004830
             ([None,1],[None,-1])  0.005942
(10000,100)  ([1,1],[-1,-1])       0.006063
             ([1],[-1])            0.004812
             ([None,1],[None,-1])  0.006005

Graph of the average execution time for each shape and slice.

piv.plot()

<AxesSubplot: xlabel='shape,slice'>

Total running time of the script: ( 0 minutes 9.562 seconds)